Hi, I'm Alexis Gallagher. I've been an independent iOS developer for a long time, but I recently joined a fashion tech startup. So if anyone else here is interested in augmented reality, custom manufacturing, and fashion, please reach out to me and I'll tell you about that.

But right now, I'd like to talk about value semantics.

Now, I don't know if you remember, but I feel like 2014, 2015, and 2016 — these were the bumper crop years for talks on value types. There were at least half a dozen of them. Apple's done three of them, if you count the last WWDC. There was a really good one here by Andy Matuschak and a couple others.

So why do we need another talk on value types? Fair question. I'll give you two reasons.

One reason is you need to understand value semantics to understand value types. Because one thing I noticed in almost all the value type talks is that about midway, they would all use the phrase "value semantics".

And then they'd never define it! Then they'd sort of keep marching forward.

So it got stuck in my mind as this puzzle: "What is this thing that they need to keep bringing up, but it's never really explained?"

I also noticed that the term value semantics came up at exactly the point where those talks got complicated. They'd start by saying, "Hey, value types, relax, you know what they are. It's like integers. You've always dealt with integers. Here, you save an integer. Here, you check an integer." You're thinking, "Great, value types, I'm rocking it, I understand it."

And then in the middle there — "And this is the copy-on-write optimization to get at deep storage" — and suddenly it becomes a much trickier talk!

And it was always at this tricky point when value semantics would pop up, and then disappear, like a monster, jumping out from behind a tree at night and then hiding again.

So I formed the idea in my mind that these things were connected: that if I understood value semantics, I'd also understand why all these talks got complicated at the same point.

And I think that's actually true. I think understanding value semantics in a clear way helps you understand value types much better, and gives you a much cleaner frame for understanding everything connected to value types.

The second reason value semantics is important, is because they're updating Foundation to use it everywhere. So it's no longer a minor quirk of the Swift language. It's part of understanding Foundation. It's part of understanding the core types that we use to build all kinds of apps.

That's why value semantics is worth examining, even if it's usually mentioned in passing.

Value semantics is the thing that's been in the center of the room that we haven't pinned down. But if you pin it down, everything else just falls into focus.

So now to illustrate why I feel like it's not pinned down, let's have a … pop survey!

Just four questions. I swear there are no wrong answers here. Because almost every possible answer is right, according to some perspective.

- Who here would say that all structs are value types? Are structs value types? Are all of them value types?

Okay, so it's about 65% of the room.

- What about all classes? Are all classes value types? I define something with class. Is that a value type?

Audience: No, it's a reference type.

Okay, he's saying no, it's a reference type.

- All right, now, what about: Do all structs have value semantics?

I've got a firm yes in the middle. Let's see how many people are just not answering. Who here says all structs don't have value semantics? I've got one other person.

So one person says yes. One person says no. And for everyone who's watching from the camera, about 58 people have not raised a hand at all!

So — "value semantics" is a bit ambiguous!

- Next question would be about classes. Do classes have value semantics?

I think we've got the same kind of answer.

As you see, value semantics is not very frequently defined.

And even when you actually dig into the literature, all sorts of answers are viable.

So my agenda for this talk is:

- First, I want to review value type ideas that we've encountered before, but also get into value semantics in the context of that review.
- And then I want to propose a definition for value semantics that I hope makes it something you can grab and use and really understand.
- And last, I'm going to offer a recipe. If you want to define your own type, and you want to be sure it has value semantics, here is a checklist-style recipe for doing it so you know that you've got a value semantic type.

First, let me review it.

The easiest way to start getting into value types and value semantics is not with an abstract definition but with a game.

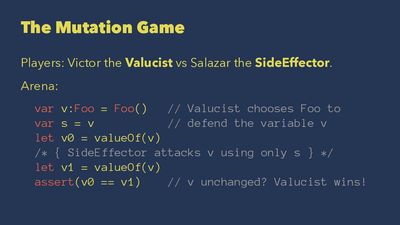

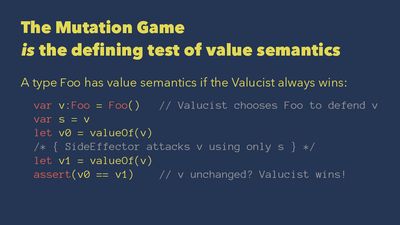

I've invented this game and I call it the Mutation Game.

The mutation game is played with two players.

We have Victor the Valucist. He's the good guy.

And then there's Salazar the Side-Effector. He's the bad guy.

And the game is this. Victor the Valucist is going to choose a type Foo, and is going to initialize a variable v with that type Foo.

Then Salazar is going to try to attack that variable. He's going to try to change the value of that variable.

But here's the rule. Salazar doesn't get to touch the variable v directly. All he gets to use is another variable s. That's Salazar's attack variable.

Salazar also gets to define a pure audit function that's used to measure the value v. (It needs to be a pure function of v. He can't just return a random variable.)

Here's the arena of play.

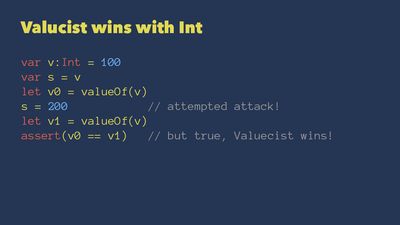

    var v: Foo = Foo()
    var s = v
    let v0 = valueOf(v)
    /* Here Salazar attacks v using only s */
    let v1 = valueOf(v)
    assert(v0 == v1)

This should look pretty familiar from talks we've seen on value types.

In the beginning, the Valucist Victor defines the variable v, type Foo. And then we get this important statement in the middle, var s = v. The value of s has now been determined by the value of v.

We take the initial value of v with the valueOf function, and then save that into v0, to be able to see later if v changed.

And in that blocked-out comment, that's where Salazar can do anything he wants to do, as long as he's only using the variable s. He can't touch the original v.

Then we take the value of v again, so we can see, has it changed or is it unchanged?

If it hasn't changed, then Victor wins. If it has changed, then Salazar wins.

Now, I'm not going to lie to you. This talk is a bit abstract. So to try to liven it up, I've tried to make this game concrete.

These are the two characters.

There's Victor the Valucist, a hero of virtue.

Maybe you can see he's marrying someone, whom I just cropped out of the frame so you can only see her hand grabbing his arm. He's a good guy.

Then there's Salazar the Side-Effector. He's much more dramatically dressed, sort of seductive, but evil. He's tempting us, but we shouldn't be tempted by Salazar, the Side-Effector. Thank you, Alan Rickman, rest in peace.

With these characters in mind, let's play out the game a few times.

What happens if Victor the Valucist decides to play with the type Int?

If Victor plays with Int, then Victor the Valucist wins.

v is unchanged. s cannot touch the value of v.

Why? Because Int is a value type, right?

And what does that mean? When we say something is a value type or a reference type in the context of Swift, really, the thing that we're talking about is what I call assignment behavior. This means what happens when you assign to the variable.

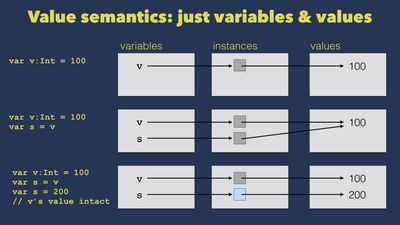

This is the magic picture that makes the assignment behavior of value types very clear.

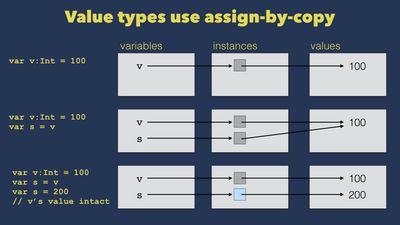

Value types use assign-by-copy.

We start with a declaration that v is a variable (it's in the set of all variables there). It's pointing to a particular instance. And that instance has a value. The instance has the value of 100.

Then when we assign to a new variable s, assign-by-copy means it behaves as if we are creating a copy of the instance. That's why we have two instance boxes in the second row here.

The new variable s is pointing to the new instance, which was copied from the first one.

But that new instance, maybe because it's made of the same bytes, it points to the same value. It also points to 100.

So whatever happens later, whatever Salazar does with the variable s, it's only going to affect that instance which the variable s is pointing to. He can't do anything that changes the value of v.

The value of v you get by taking the variable v, going to the instance that it points to, and then looking at the value which that instance carries.

This is the way to think about assign-by-copy.

And this is why for a type like Int, the valucist always wins.
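To make that round concrete, here is a minimal sketch of the game played with Int. The attack lines and the name `victorWins` are my own illustration; for Int, the audit function is simply the number itself.

```swift
// Victor picks Int. Salazar may only touch `s`.
var v: Int = 100
var s = v                 // assign-by-copy: `s` behaves as its own instance

let v0 = v                // audit: for Int, the value is just the number
s += 1                    // Salazar's attack, using only `s`
s = 0                     // any mutation or reassignment of `s` is fair game
let v1 = v

let victorWins = (v0 == v1)
print(victorWins)         // prints "true"
```

Whatever Salazar writes in the attack lines, nothing he does to `s` can reach `v`.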

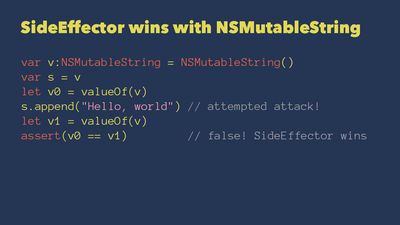

But what if Victor is relaxed and naive and trusting after his wedding day and instead of Int he chooses NSMutableString?

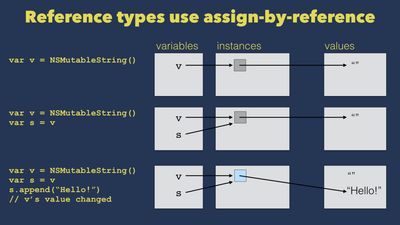

Well, then he's totally hosed because this is a reference type. And reference types use assign-by-reference.

As you can see right here, looking at the same kind of diagram, the variable v points to the instance, which is an actual mutable string object. The value of that mutable string, it starts out as an empty string.

But since NSMutableString is a reference type, when you say var s = v, what you're really saying is "I want to have a new variable s, and I want it to point to the same instance as v".

So v and s now share a common instance.

Then what can happen is that if Salazar makes a change to the actual instance, by mutating that mutable string and changing it to "Hello", so that its value is now "Hello", well, then the value of v is "Hello", and so is the value of s.

So Salazar, just by grabbing hold of s and using it as a handle, was able to get in there and destroy v. He changed the value of v; he changed what we wanted it to mean.
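Here is a rough sketch of that losing round. The audit function `valueOf` is my assumption about how you would measure the value of a mutable string: it snapshots the current characters as an immutable Swift String.

```swift
import Foundation

// Audit function: a pure function of its argument.
func valueOf(_ m: NSMutableString) -> String {
    return String(describing: m)
}

let v = NSMutableString()     // the value starts out as ""
let s = v                     // assign-by-reference: same instance as `v`

let v0 = valueOf(v)
s.append("Hello")             // Salazar mutates the shared instance via `s`
let v1 = valueOf(v)

let victorWins = (v0 == v1)
print(v0.isEmpty, v1, victorWins)   // prints "true Hello false"
```

Note that both variables can be declared with `let` here. The reference never changes; it is the shared instance behind it that does.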

So what? What's the big deal here?

This is probably familiar material. But this gets at the benefits of value types.

Value types prevent unintended mutation. If I don't want to worry about v getting messed up, and v is a value type like Int, for instance, then I don't need to worry about someone who's holding on to s doing something that messes up v. So that's good.

Why is that good? It makes it easier to reason about what you're doing. You just look at what happened to v. You don't need to look at something else that might have this indirect spooky effect on v. It makes it easier to reason locally, since you look at just a little bit of code.

Or, "It makes functions great again" is another way to think about it.

If I have a function and it takes an Int and it returns an Int, and it's a well-behaved function, then I don't need to worry that under the hood, it's doing something to the Int that I handed in to it. Because the rule for the variable that's used in the body of the function is the same kind of assignment rule that's used for assigning a variable within a block, within a scope of code.
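A small sketch of that point, with a hypothetical function `incremented`: the parameter is bound to the argument by the same assign-by-copy rule as `var s = v`, so nothing the body does can reach the caller's variable.

```swift
// A well-behaved Int -> Int function (illustrative name).
func incremented(_ n: Int) -> Int {
    var local = n     // a copy; changing it cannot affect the caller's variable
    local += 1
    return local
}

let v = 100
let result = incremented(v)
print(v, result)      // prints "100 101"
```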

All this also helps with thread safety, because you don't have to worry about something else changing something that you didn't expect, and possibly changing it in the wrong order.

So far, so familiar.

There were a lot of great talks on value types. Here's a list of them.

But this has been the easy part of the value type talks, before they start getting a bit tricky.

So what else is there to say?

Should we always just use structs when we want to have safety, and then use classes when this kind of safety isn't important to us? Is it as simple as that?

Not exactly, because what I've described as a benefit of value types is not a benefit you always get from a value type. So saying it's the benefit of value types is in fact a bit misleading!

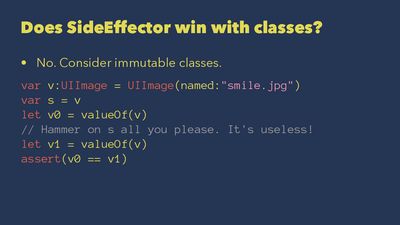

Because, for instance, one way I can get this benefit is by using a reference type that happens to be immutable.

Consider what happens here with UIImage.

Here I define a UIImage that represents a smile, and Salazar cannot change it.

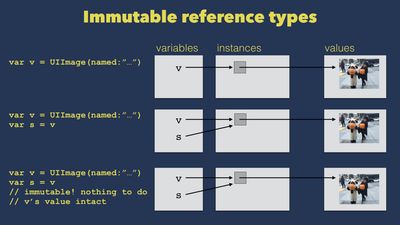

Let's look at it in detail with the diagram.

I define a UIImage that represents, say, adorable children holding pumpkins.

Then I define a new variable s, that's pointing at the same UIImage instance.

Now we might worry that Salazar could grab s and then do things that would change the image. Could he go change the colors on it?

No actually, he can't do that. Because UIImage has been deliberately carefully defined to be immutable.

If you look at all the properties on UIImage, they're all read-only. There's no function you can call on UIImage that's going to change the image inside it. There's no property you can set on UIImage that's going to change the image itself.

As a result, even though these variables are sharing content at the instance level, even though they're sharing the same instance, it behaves exactly as if they're totally independent, because you can't change one from the other.
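UIImage itself needs UIKit, so here is a sketch with a hypothetical stand-in class, `Picture`, that follows the same design: every stored property is a `let` constant, so there is nothing for Salazar to mutate.

```swift
// Hypothetical stand-in for an immutable reference type such as UIImage.
final class Picture {
    let name: String          // `let` stored properties are fixed after init
    let pixels: [UInt8]       // an Array of UInt8 is itself value semantic

    init(name: String, pixels: [UInt8]) {
        self.name = name
        self.pixels = pixels
    }
}

let v = Picture(name: "smile", pixels: [0, 255, 0])
let s = v                     // assign-by-reference: one shared instance

print(v === s)                // prints "true": same instance
// s.name = "frown"           // does not compile: `name` is a `let` constant
// s.pixels[0] = 9            // does not compile either
print(v.name, v.pixels)       // prints "smile [0, 255, 0]"
```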

So this might seem to be one of the benefits of value types, that I don't need to worry about Salazar. But the benefit here is actually accruing to a reference type, because that reference type is immutable.

Well, okay, you might think, "So maybe some reference types have this benefit. But value types -- I can still go with a value type if I want to be safe."

No, not really!

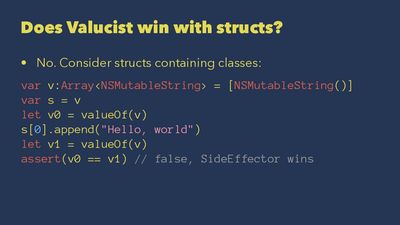

Because I can create a value type like Array that contains inside it a reference type, like an NSMutableString.

So in that case, we still have this problem.

I've got a value type v, I assign v to s, then take the value of it.

But now within the Array, I can go in there and grab the first component, which happens to be mutable, and change that.

And now, if you take the big picture, the value of the array has changed, because the value of the array should be defined by the value of everything in the array.

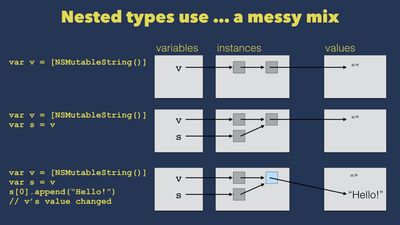

The diagram of it looks like this.

Variable v is pointing to that first instance box, which is the Array. And that instance, that Array instance is pointing to the second box, which is the NSMutableString. And when I go var s = v, sure, okay, it is as if assign-by-copy is creating a new Array. But that new Array instance is pointing to the same mutable string instance.

So I have exactly the same problems as before.
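A minimal sketch of this round follows. The audit function `valueOf` is my assumption: it treats the value of the array as the text of every element in it.

```swift
import Foundation

// Audit function: the value of the array is the text of each element.
func valueOf(_ a: [NSMutableString]) -> [String] {
    return a.map { String(describing: $0) }
}

let v: [NSMutableString] = [NSMutableString(string: "Hi")]
let s = v                     // copies the Array, but not the object inside it

let v0 = valueOf(v)
s[0].append(" there")         // Salazar reaches the shared element through `s`
let v1 = valueOf(v)

print(v0, v1, v0 == v1)       // prints: ["Hi"] ["Hi there"] false
```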

So this independence, which you might think of as the benefit of value types: well, sometimes I get that benefit with a reference type! And sometimes I don't get that benefit even with a value type.

It's a bit puzzling. And it's not just a problem with Arrays. Really, it's for any value types.

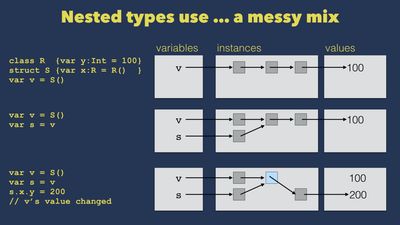

Here's the same thing with a struct.

If I define a struct, and it has internally a property that is a reference type, you get exactly the same issue, as you'd see with an Array.

These are sometimes called nested types. And the behavior they exhibit is messy.
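Here is a sketch of the struct case, using hypothetical types `Ticket` and `Notes`. The plain Int property is truly copied, but the reference property still leads both variables to the same mutable object.

```swift
final class Notes {               // hypothetical mutable reference type
    var text = ""
}

struct Ticket {                   // hypothetical struct: a value type...
    var id: Int
    let notes = Notes()           // ...that holds a reference to mutable state
}

let v = Ticket(id: 1)
var s = v                         // copies `id` and the reference, not the Notes object

let before = v.notes.text
s.id = 2                          // harmless: `id` was truly copied
s.notes.text = "hacked"           // reaches the instance that `v` also points to
let after = v.notes.text

print(v.id, before.isEmpty, after)    // prints "1 true hacked"
```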

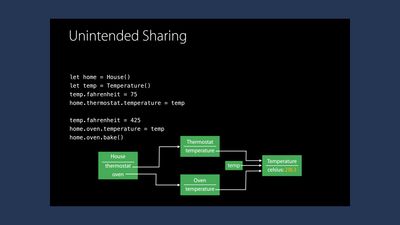

Now, Apple themselves, in their talk, talk about this problem, as they're getting into the benefits of value types.

They describe it as "unintended sharing". But it's not always unintended!

This is where their talk starts to get complicated.

Sometimes, you want to do this, because you want secretly to maintain common storage for efficiency reasons. But then you don't want that secretly maintained common shared storage to mess up the behavior of your types, so you put in special tricks to be sure that as soon as the user would change something that's shared, then you stop sharing it at the last minute.

This is the "copy-on-write optimization".

They go into this in the 2015 talk, and it's a great talk. It's worth watching.

But to come back to the big question here about the benefits we're trying to achieve -- if we're the valucist, we want to know "what kind of type can I use if I want to win the mutation game?"

We still need an answer for that.

And we've seen the answer is not just "value types" because clearly a nested type fails.

And the answer is not just "avoid reference types" because sometimes a reference type works.

So what is the thing that wins the mutation game?

And this is actually the definition of value semantics.

The kind of type that wins the mutation game is a type that has value semantics.

And something like Int has value semantics. But you can also make more complicated things that have value semantics.

So what we're talking about when we talk about these benefits is whether a type has value semantics. We're not talking about whether it's a value type.

So having set that up, let me define it.

The reason I think it's essential to have a definition is because what's confusing about many talks on this issue is that they lack a definition.

They start by saying, "value types — easy, they behave like Int."

But then when they get to the part where they start talking about avoiding unintended sharing when you define nested types, they change course and say, "Careful, we need to be sure our type behaves like a value type."

But what does "behaves like a value type" mean? My nested struct is a struct. So it is a value type. How can it not behave like one?

If you never offer a definition of "behaves like a value type" clearer than saying "behave like integers," then you never notice that your vocabulary is missing a concept. This missing concept is the concept of value semantics as opposed to a value type.

So it's critical to define it.

What's the definition I'm offering?

So my first definition is an operational one: the mutation game is the defining test of value semantics. Simple as that.

So I would say

A type `Foo` has value semantics, if by using `Foo` the valucist will always win the mutation game.

The thing I like about this is it's very easy to think about because it's adversarial. Your opponent defines you a type, and you can just imagine code like this and think, "well, is there anything I could do that would mess them up?"
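As a sketch, one round of the game can be written as a generic function. Everything here (`victorWins`, `Counter`, the parameter names) is illustrative. A single round only shows that one particular attack failed; the definition requires that every possible attack fails.

```swift
// One round of the mutation game. Returns true if Victor wins this round.
func victorWins<T, Value: Equatable>(
    with v: T,
    valueOf: (T) -> Value,        // Salazar's pure audit function
    attack: (inout T) -> Void     // Salazar's attack, which only ever sees `s`
) -> Bool {
    var s = v
    let v0 = valueOf(v)
    attack(&s)
    let v1 = valueOf(v)
    return v0 == v1
}

final class Counter { var count = 0 }   // hypothetical mutable reference type

let intRound = victorWins(
    with: 100,
    valueOf: { (x: Int) in x },
    attack: { (s: inout Int) in s += 1 }
)
let classRound = victorWins(
    with: Counter(),
    valueOf: { (c: Counter) in c.count },
    attack: { (s: inout Counter) in s.count += 1 }
)
print(intRound, classRound)   // prints "true false"
```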

This definition is not too abstract.

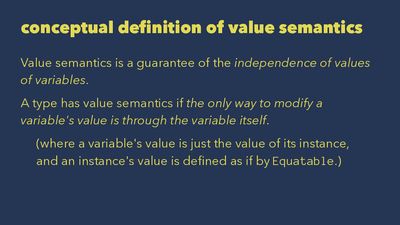

But let's say you want a more abstract statement of it.

That can be good for getting at the essence of the benefit here.

Here's a conceptual definition which is equivalent: value semantics is the guarantee of the independence of the value of variables.

And independence doesn't mean structural things. What we're talking about is "can one thing affect another, can one thing depend on another?".

So:

A type has value semantics, if the only way to modify a variable's value is through the variable itself.

If the only way to modify a variable's value is through the variable itself, then congratulations, you have a variable that has a value semantic type.

(This is with the parenthetical proviso, where we understand that when we talk about a "variable's value" what we mean is the "value of the instance the variable refers to". Strictly speaking, it's better to say that only instances have values and variables are just referring to instances.

And what is the value of an instance? "It's the thing that you're trying to compare when you define Equatable" would be a short way to define it.)

I think this is an important property!

I would love it if when I went and looked at documentation that Apple or anyone else provided for a type, it would say @valuesemantic, because this is what you want to know when you're about to use a type. What can I rely on in terms of its behavior? Is it a value semantic type or not? Whether it's a value type or a reference type is really an implementation issue. Value semantics is the thing that matters from the point of view of the type's user, I would argue.

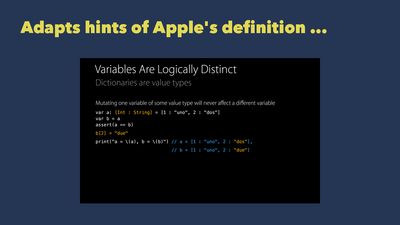

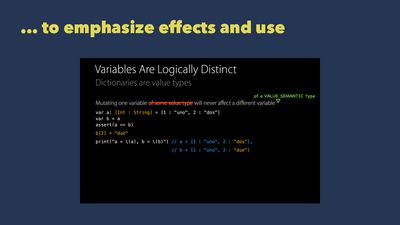

And I'd say my definition is not completely crazy. I'm really adapting hints or unpursued angles that are in Apple's own definition.

At one point in the talk that talks most about this, which is the 2015 WWDC talk with Doug Gregor and Bill Dudney, they describe it in a few different ways.

One thing they say is "variables are logically distinct."

I don't like that formulation because variables are always logically distinct, just because they are different names, different identifiers.

What I think they are trying to get at there is "structurally distinct".

But they don't actually mean that the variables are structurally distinct, but rather that the storage for them is structurally distinct.

But that also can't be exactly what matters because, again, you can have immutable variables which are not structurally distinct, since it's the same immutable instance under the hood.

What really matters is "can one thing affect another?"

And they're kind of hinting at that when they say, "mutating one variable of some value type will never affect a different variable".

But, first of all, as they say it there, it's clearly false. Because as we've seen with an Array, a struct, if you have a nested type, then mutating one variable of a value type will affect the other one. So I think they misspoke.

Fundamentally, the important thing is not whether your variable can affect something else. The important thing is whether you are immune to being affected by other things.

So I'd say that the formulation they have there in the middle is about right.

But I would offer a modest amendment, which is this:

mutating one variable will never affect a different variable of a value semantic type.

That's what we're trying to get at here.

The type is value semantic if it's immune from side effects produced by other things, not if it's guaranteed not to perpetrate side effects on other things.

So I think this is the right thing to focus on when you think about what value semantics should mean.

I think the definition I'm offering is consistent with what everyone else has said. But I like the way it focuses things a little more.

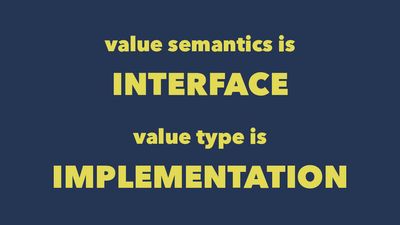

Or just to offer a summary of it: value semantics is about interface, whereas value type versus reference type is about implementation.

Because again, value semantics is what you want to know if I give you a type and you want to know how it's safe to use it.

Whereas whether it's value type or reference type -- this is really this implementation detail about what's happening with memory under the hood. And I suggest we try to rise up to a higher plane, when we can afford to in terms of performance and other engineering restrictions.

Or to put this more grandly, I'd say the value of value semantics is that value semantics let you dream in values. They provide a simpler way to think about it.

And let me offer an illustration of what I mean by that.

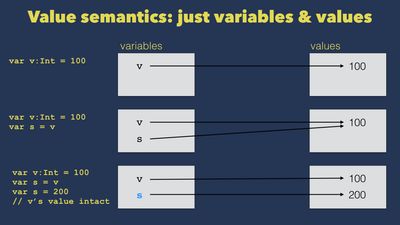

So far, all of the charts I've offered have three columns, and they depict the relationship between variables, instances and values.

This is the way you need to think when you're dealing with things that don't have value semantics. You look at these variables and you need to have a model in your mind of all the little bits and pieces that they touch, and if a thing that was touched by many things got touched and got changed.

But the whole benefit of value semantics is that it allows you to forget instances exist, because our definition of value semantics doesn't actually have the concept of an instance or the concept of reference in it.

It only has the concept of variable and value. "A variable has value semantics if the only way to affect the value of that variable is through that variable."

The whole concept of references and instances is gone. Because when you have value semantics, you can just use this diagram as your mental model.

I have a variable and it has a value.

Values don't change. They're sort of immutable, perfect, ideal things, existing in the mind of God, and variables are just names for them.

It's a much clearer way to think about it, I think.

Part of the reason I think it's not usually framed in this way, is because the word "value" is used in very different and inconsistent ways in computer science.

This is especially true if you compare the way the word value is used in C- and C++-derived languages, like Swift, and the way value is used in functional programming languages, like Clojure, SML, or OCaml.

What you see is that the C- and C++-derived languages have a machine-oriented view of value.

If you go digging through the C++ spec to find out where they define the word "value", you go through footnote, footnote, footnote, and then you find a footnote saying "we mean what it means in the C spec."

Okay. Then you go to the C spec, and you go dig, dig, dig, and that also bottoms out in a footnote. And the final definition of "value" is "the meaning that's attributed to an area of storage".

So you find that the idea of storage is still what's at the bottom.

And it's weird that these documents, the C and C++ language specs, that are full of lovingly detailed intricacy about the nature of storage and bytes, at the end, they just kind of wave their hand and say "it's the meaning you attribute to it!"

It's like they kind of ran out of steam when they're trying to explain it.

Whereas, by comparison, if you look at the way "value" is used in the more mathematically-derived or functional oriented languages, you find that "value" is more like "the number five, as a mathematically ideal abstract object."

This is also why I'm avoiding the term "pass-by-value," which I think we've all encountered in programming language textbooks, because "pass-by-value" doesn't actually say what sense of the word "value" you mean.

And it gets quite messy because you'll have a reference pointer and say, "Well, it's passed by value, but the value is actually a reference."

In my opinion the whole beauty of Swift and more abstract languages is that they hide the raw mechanics of pointer reference from you. So I think using terms like "pass-by-value" is dragging us back into a more confusing representation that we should only work in when you need to be working at that level of representation.

And all the benefits of values that people are pitching, where they talk about immunity from side effects and independence and easy to reason about, these are the benefits of logical values. These are not the benefits of value as abstractly defined meaning around storage.

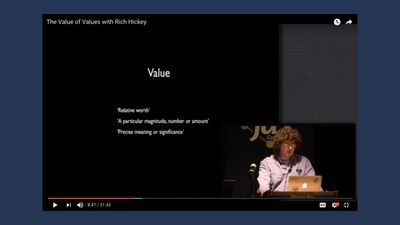

There's a great talk by Rich Hickey, the author of the Clojure programming language, called "The Value of Values."

I highly recommend everyone go out and see it and watch it a few times. It takes a while to appreciate.

Just like my old English teacher would read Moby Dick every year, I feel like every year I watch it again. And then I understand more subtleties in it.

But I think a lot of these benefits actually come from the mathematical clarity of that kind of notion of value.

So I've offered my slightly adventurous (but I would argue totally consistent with everything that's come before) definition of value semantics.

Here are some consequences of it.

First, I would say I'm putting my flag here into the ground: immutable reference types have value semantics.

This makes sense by any reasonable definition of value semantics. Yes, they're reference types, but they have value semantics in the sense that they behave like values and someone else can't mess them up.

Second thing -- if you think about it, types have value semantics not as an absolute matter, but only relative to an access level.

Why?

Because a variable has value semantics if the only way to modify the value of the variable is through that variable. Well, what you can do to the value of a variable depends on where you're sitting, depends on your access level.

So if a type has a fileprivate access modifier on something related to the type, then I can access it if I'm running code that was defined in the same file. But if I'm running code that was defined outside that file, then I can't access it.

So if you think about it, what that means is that the type might have value semantics from one access level, but not from another. Because from one access level, you can't mess it up. From another access level, you can.

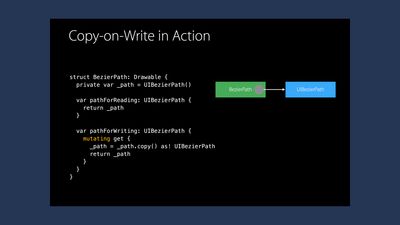

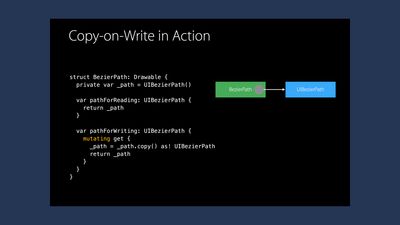

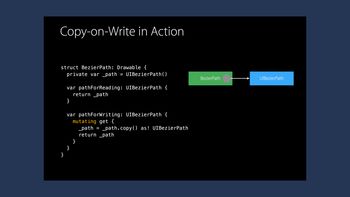

This is really already implicit in the copy-on-write optimization example that Apple uses and that everyone uses when they're talking about more elaborate types that want to maintain value semantics, while also having shared deep storage.

They're using the access level here to control it. So if I could get in there and mess with the private var, the underscore path property, well then I would be spoiling the value semantics of it.

So that's another thing to consider, that the types have value semantics relative to an access level.

Now, that's some kind of summary on what are the consequences of value semantics. And I hope this definition is one that you can grab.

I'd now like to point out how another nice thing about this definition is it offers you a fairly simple recipe.

If you're defining a type, and you want to ensure it has value semantics (let's say you're hacking on the uncompleted implementation of Foundation and you want to have a value semantic type), you need a recipe for it.

You need rules you can apply when it's 11 o'clock at night, and you're a bit tired.

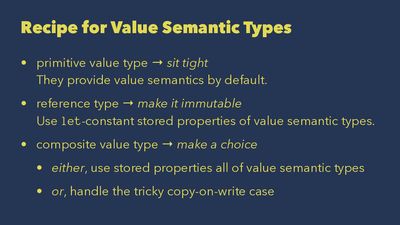

Here's the recipe for value semantic types.

Case 1: let's say you're working on a primitive value type like Int.

In that case, sit tight, you're done. Those types all have value semantics by default.

Case 2: let's say you're working on a reference type -- so something that's defined using class, which uses assign-by-reference assignment behavior.

In that case, make it immutable. If it's immutable, it will have value semantics. How do you make it immutable? You can use let constant properties. So all your stored properties need to be let constants. And those properties themselves need to have value semantic types.

(You can see that one of the reasons I think this concept is coherent, is it recurs in this way, which gives you a nice structure.)

Case 3: let's say you're dealing with a complicated case of a composite value type.

Well, in that case, you have a choice to make. Either use stored properties that all themselves have value semantic types, and you're done. Or, if you want to do the complicated thing with shared deep storage, then you need to handle the tricky copy-on-write case in the way that's been described.

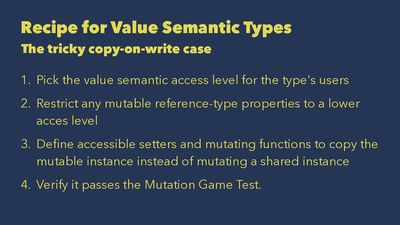

And just to spell out the instructions on how to handle the copy-on-write case, here's how you do it.

First, pick the value semantic access level for the type's user. It might be public, might be module, depends what you're doing.

Then, restrict any mutable reference type properties to a lower access level.

Then, define accessible setters and mutating functions to copy the mutable instance, instead of mutating a shared instance.

Finally, if you want to know if you've done it right, you can always just go and play out the mutation game as a test. Can Salazar mess this thing up? If he can, then you haven't quite finished your job.
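Here is a minimal sketch of those steps, using hypothetical types `Bag` and `Storage`. It is not Apple's example; it only assumes the standard library function `isKnownUniquelyReferenced`. (Array already does its own copy-on-write, so wrapping one like this is purely for illustration.)

```swift
final class Storage {                     // hypothetical shared deep storage
    var items: [Int]
    init(items: [Int]) { self.items = items }
}

struct Bag {                              // hypothetical value semantic type
    // The mutable reference is kept below the user's access level.
    private var storage: Storage

    init(_ items: [Int] = []) {
        storage = Storage(items: items)
    }

    // Read-only view for the type's user.
    var items: [Int] { return storage.items }

    mutating func add(_ item: Int) {
        // Stop sharing just before writing: copy if anyone else holds the storage.
        if !isKnownUniquelyReferenced(&storage) {
            storage = Storage(items: storage.items)
        }
        storage.items.append(item)
    }
}

// Play the mutation game as a test.
let v = Bag([1, 2])
var s = v                 // `s` shares the Storage instance for now
let v0 = v.items
s.add(3)                  // the write triggers a copy, so `v` is untouched
let v1 = v.items
print(v0 == v1, v.items, s.items)   // prints "true [1, 2] [1, 2, 3]"
```

If `storage` were accessible to Salazar, he could mutate the shared instance directly, which is why the access level is part of the recipe.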

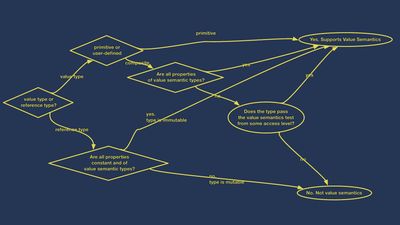

You can also define a flowchart of the questions you'd ask yourself to determine whether the type you're looking at is a value semantic type, going through the steps of the recipe.

To some extent, this might even be determinable through automated analysis of types. I think that would be quite handy.

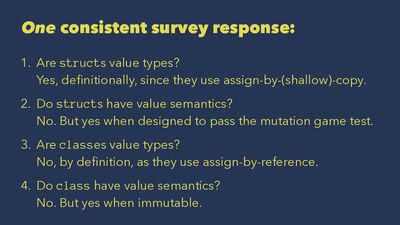

So to loop back and consider the pop survey questions that I asked.

You can see how there's ambiguity because of the different ways people have used these terms.

But here is one consistent set of answers, one consistent interpretation:

- Are structs value types? Yes, by definition, because they use assign-by-copy.

- Do structs have value semantics? In general, no. But yes, when they're designed to pass the mutation game test. There's a couple ways you can do that.

- Are classes value types? No, by definition, because they use assign-by-reference.

- Do classes have value semantics? In general, no. But yes, when they are Immutable, and there's a definition of how you make the type Immutable.
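To make the two "in general, no, but sometimes yes" answers concrete, here is a small sketch with made-up types: `Badge` is a struct that fails the mutation game because it exposes a mutable reference, and `Money` is a class that passes it because nothing about an instance can change after initialization.

```swift
// Hypothetical types for illustration only.
final class MutableName {
    var value: String
    init(_ value: String) { self.value = value }
}

// A value type WITHOUT value semantics: copies share the same MutableName.
struct Badge {
    let name: MutableName
}

// A reference type WITH value semantics: every stored property is an
// immutable value, so sharing the instance can never be observed.
final class Money {
    let cents: Int
    init(cents: Int) { self.cents = cents }

    // "Changing" a Money returns a new instance rather than mutating this one.
    func adding(_ other: Money) -> Money {
        return Money(cents: cents + other.cents)
    }
}

let badgeA = Badge(name: MutableName("Ann"))
let badgeB = badgeA
badgeB.name.value = "Bob"      // reaches through badgeB and changes badgeA too

let price = Money(cents: 500)
let alias = price              // assign-by-reference, but harmless
let total = alias.adding(Money(cents: 250))

print(badgeA.name.value, price.cents, total.cents)
```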

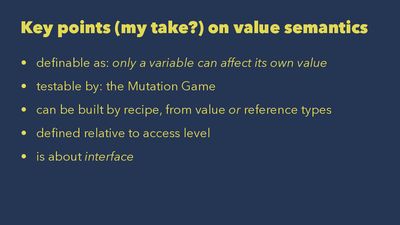

To sum all this up, these are the key points.

What is value semantics? It means that only a variable can affect that variable's value.

How do you test it? You can test it with the mutation game.

Value semantics types can be built by following this recipe in a fairly straightforward way, and you can build them from value types or from reference types. Don't let people lie to you about that!

Value semantics is defined relative to an access level. It's not an absolute property.

And fundamentally, value semantics is about interface. Value semantics is what you should care about as a user. If I give you a type and you need to use it and I tell you it's a value semantic type, I've told you much more useful information than if I told you it's a value type or a reference type.

Finally, I just wanted to acknowledge discussions I had with other people that helped me think about this and helped me make it more clear. And if what I've said here goes off the cliff and is not quite right, then that's definitely my fault, not theirs.

But that's what I have to say about value semantics. I hope that makes some sense!

Questions & Answers

I think you touched on something earlier when you talked about immutability enhancing thread safety. And listening to your talk and looking at these language features of Swift, we had an earlier talk where someone talked about using Swift on the server. So I don't know why but I keep thinking of the Erlang Virtual Machine. And immutability, what they've done with the Erlang Virtual Machine language has shown the superiority of immutability when it comes to handling massive numbers of concurrent connections reliably. So will Swift ever approach that? Probably not. But it's compiled, it's really fast. Erlang is also compiled. Anyway, I just couldn't help thinking about Erlang and I think you inadvertently touched on the superior nature of Erlang when it comes to doing concurrent, reliable servers, because of the immutability. And I believe they have kind of a write on copy type of mechanism.

I think there's definitely a connection here. And I'd say that the concept of value semantics is a little more inclusive than immutability. Immutability is one way you get it, but—

With immutability, you're kind of guaranteed it.

—yeah, with immutability, you're guaranteed it, but there's still some kind of mutation that happens. And usually (I'm more familiar with Clojure, because I use that for building and shipping systems), and in that language, the primitives that you're given tend to be immutable. And then there's very controlled mutation around how you refer to them. Swift also gives you this very controlled mutation, but it's concealed a little bit in the way it works when you make something mutating. I would say that, I think the copy-on-write optimization is a performance optimization to maintain value semantics while under the hood you're doing this stuff to keep storage cheaper. It makes copying cheaper.

But the functional programming languages like Clojure, maybe like Erlang as well, they'll do much more there. Copy-on-write is the first and most simple optimization of that kind that you can do. There's this whole area called persistent data structures. There's a book on it by Okasaki. It's quite cool if you're interested in it.

And you can build data structures that do much more elaborate and fine-grained kinds of structural sharing of the deep storage while maintaining an external value semantic interface.
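The simplest example of that kind of structural sharing is probably an immutable linked list, sketched here in Swift with a hypothetical `List` type. Prepending builds one new node that points at the existing list, so two lists built from the same base reuse its nodes instead of copying them (in practice Swift boxes `indirect` payloads behind a reference, which is what makes the reuse cheap). From the outside, each list still behaves like an independent value.

```swift
// Hypothetical type for illustration: a minimal persistent (immutable) list.
indirect enum List<Element> {
    case empty
    case node(Element, List<Element>)

    // Returns a new list; the receiver is reused as the tail, not copied.
    func prepending(_ element: Element) -> List<Element> {
        return .node(element, self)
    }

    // Flattens the list into an array, front to back, for inspection.
    var array: [Element] {
        var result: [Element] = []
        var current = self
        while case let .node(head, tail) = current {
            result.append(head)
            current = tail
        }
        return result
    }
}

let base = List<Int>.empty.prepending(3).prepending(2)
let left = base.prepending(1)     // shares base as its tail
let right = base.prepending(0)    // shares the same base

print(base.array, left.array, right.array)
```

Real persistent data structures, such as the trees behind Clojure's collections, apply the same idea to richer shapes so that updates in the middle also share most of the old structure.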

And actually, I think it would be great to implement those in Swift, and they would be useful on the server, and you would get very good performance with them. So I think it's—

Potentially it's like more of a general purpose language that can be mutated and manipulated these various ways, like you've suggested. But I think with that one particular use case of having a massively concurrent reliable server. I think that game has been, that battle has been won by the Erlang Virtual Machine, because that's what they tuned it for.

—right. It also has an error handling model that's very well thought of.

Yeah. The supervisor and restarting things are pretty cool. Yeah. Thank you. Great talk.

Thank you.

Is there a framework or something structured to help people get this better? Has anybody written any excellent structures or frameworks to use these things?

Well, I think that we're already using these things all the time.

Yeah. But I mean, consciously and best practices.

No, no.

And I find that a bit puzzling to be honest. Because I really do think value semantics is more important than value types, and that it's the thing that matters when you're deciding how you can use a type safely. Obviously, some people have an excellent working understanding of it, because all of the people at Apple and elsewhere are very carefully building types that have these copy-on-write optimizations while carefully maintaining value semantics. But then the whole term "value semantics" is rarely used and not used consistently, even though that's the thing people are struggling to maintain and which they do see the value of.

So why don't we talk about it more? Yeah, I don't know. I think it is partly because of these different ways of using the word "value."

And then people have this idea of pass-by-value from computer science class. And that idea just confuses them and the way they think about it.

But I think fundamentally, this is a simple idea. All it means is I have a variable, nothing can change it except using the variable. It's so simple. And so why isn't it just put this way? I don't know.

Yeah. So yeah. But great presentation.

Thank you.

Thanks.

I'm not sure this is totally relevant, because it goes into the implementation. But for mutating get, how exactly does that work? Because let's say some struct that I'm using has an NSMutableString, and I use a regular get, and I have a hold on that NSMutableString, and then I try to mutate it, it's not going to go to the mutating get. It's just going to, I already have access.

So you're asking about the mutating get that you see in the copy-on-write optimization example?

Yeah.

Yeah. So let's look at that. I see they do it here in a somewhat indirect way.

But basically, the trick is, and here they're doing it by defining a kind of path for reading and a path for writing, which I think obscures it. But basically, the trick is shielding your mutable properties. So let's say I have a type Foo and I have a function in it, like updateCounter, let's say. And then internally, I also have, say, an integer x, and that's the thing that I want to have shared until I can't afford to share it anymore. The updateCounter function gets marked as mutating so the type system knows that it needs to treat this as a modification.

And then in the body of your function updateCounter, you check to see if the stored property x that you're hiding is being referenced by more than one variable. And there is actually a function called isUniquelyReferenced. So you can actually just write conditional code in the updater, that will check to see if it's being referenced by lots of people. So when you're doing this update, you can talk to the runtime that the language gives you and say, "Hey, wait a minute, is someone else also using this thing that I'm about to change?" And if it says yes, then you just create your own copy of it. And if it says no, then you don't actually need to bother.

So it depends on these special methods, which have names like isUniquelyReferenced, which you can use to check at the point of access if you're about to change something that someone else is also using. And then you hide it the moment that happens, so no one can ever tell that they were being shared all along.
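Here is a rough sketch of that `Foo` example. Two things in it are assumptions beyond what was said: the integer `x` is wrapped in a small hypothetical class, `CounterBox`, because only a class instance can be shared and checked for sharing, and the check uses `isKnownUniquelyReferenced(_:)`, which is the name this standard library function has had since Swift 3.

```swift
// Hypothetical helper class: the shared storage that holds x.
final class CounterBox {
    var x: Int
    init(x: Int) { self.x = x }
}

struct Foo {
    // Shielded: users can never get hold of the box itself, so the only
    // way to change x is through updateCounter() below.
    private var box = CounterBox(x: 0)

    // Reading can safely go to the shared instance.
    var x: Int { return box.x }

    // Marked mutating so the compiler treats calls as modifications of self.
    mutating func updateCounter() {
        if !isKnownUniquelyReferenced(&box) {
            // Someone else is also using this box: take a private copy first.
            box = CounterBox(x: box.x)
        }
        // The box is ours alone now, so changing it in place is invisible
        // to every other Foo.
        box.x += 1
    }
}

var fooA = Foo()
let fooB = fooA          // both values share one CounterBox for now
fooA.updateCounter()     // shared, so fooA copies before writing
fooA.updateCounter()     // unique now, so this writes in place

print(fooA.x, fooB.x)
```

This also answers the NSMutableString worry: if the struct handed the mutable object out through an ordinary getter, callers could mutate it without ever reaching the check, which is why the reference has to stay private.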

That's used for all of these types.

So one analogy I think about is this. Let's say you go to a store that sells stationery, and it has all these little shelves and every shelf has a nice little notebook on it. And they all look the same because they're selling a lot of these notebooks.

Then you walk up to the shelf and you're about to reach for one and write on it. But secretly, the shopkeeper is watching you. And the moment you grab it, the shopkeeper, faster than you can see, he goes and does this action behind the scenes. What is he doing?

Actually, you weren't seeing a bunch of different notebooks! The shopkeeper has one notebook behind the shelves. And then he has this system of mirrors. So everything that you thought was a distinct notebook was actually just a reflection of this one notebook. But the moment you're going to start writing on it, well, that's when his system would get messed up. So before you can actually touch it, he swoops in and puts a writable notebook in your hand.

And that's the one that you grab, and you write on it. And you're like, "Okay, great," and you never realize that all along, you were being lied to.

That's what the copy-on-write optimization is. You've got all these distinct variables that behave as if they're pointing at distinct things, but actually, it's all mirrors. There's just one thing under the hood.

But as soon as you do something that would spoil that illusion by changing the one thing (because remember, if the shopkeeper didn't show up, and I just wrote on that notebook, then I would see my writing on all the reflections, if that was the original) — as soon as you're going to do something that changes the one thing, then the system does this quick swap so the effect is not visible. That's the essence of the copy-on-write optimization.

So then, when you make a type with copy-on-write, as the maker of that type, you need to keep the cognitive load of like, "oh, this is a reference or this is a value?"

Exactly, yeah. As the person who writes the type, you have to bear the cognitive load of this shell game you're doing under the hood. But the whole benefit of doing that, is so that someone who uses the type doesn't even know it works that way. And that's why value semantics is what's important, because the promise you're making to the user is that this type has value semantics. And then they don't need to know if you're doing funny business or not.

But if all you tell the person is that "the type's a value type," well, that doesn't mean anything. It could be a value type that behaves like Int or it could be a value type that behaves like an Array with NSMutableStrings.

So that's not information that I can use. What I want to see on the documentation that annotates the type is, does it have value semantics? I don't really care about this implementation stuff unless I'm doing something that's performance sensitive, where I actually need to know how many bytes got moved around.

And also, the Swift language itself is actually doing this copy-on-write thing under the hood all the time, even for the Ints, which is why I said in the beginning that assign-by-copy behaves as if the instance is copied. That diagram I showed you in the beginning just to make the idea clear (this one), well, that's also a lie. Because secretly, the language at the runtime level might not be creating that instance copy until it needs to, at the last possible moment.

But you see how a talk that ends up talking about copy-on-write can be confusing, because the real thing that matters is the value semantics, which copy-on-write provides. It's like talking forever about an implementation and not talking about why you bothered with it, like what it's going to give you. The whole point of a copy-on-write optimization is that it lets you have the benefit of value semantics from the outside, while having the-

Flexibility and power.

— while having the reduced use of storage, and the fewer copying, reduced use of compute for copying, on the inside.

Awesome, thank you. That made sense.

Thank you.

Great talk. So I'm thinking sometimes protocols or generic types that are part of the inheritance tree can be used to bring in behavior such as this. Is that something that would be, do you have to, could you inherit this value semantics on types or even variables?

I'm not sure I know what you mean. So could you have value semantics as a property that you inherited from your supertype?

Or could you assign as a type?

Okay, yeah, I don't know. I need to think about that. I can't think how you would do that off the top of my head. But there might be some way in which you could genericize the copy-on-write mechanism, because that is sort of generic. And then you could have specializations of a generic type, where, for instance, in the example I gave of how you do copy-on-write, the stored value doesn't need to be an Int. It could be a T. It could be some generic type parameter that has constraints on it. So I suppose you could, in that way, have a value type where the contents of it were generic. I haven't thought about that much, though. That's an interesting question. I think we're all done. Thank you.
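One way that idea might look, as a sketch only: a hypothetical generic wrapper, `CopyOnWrite<T>`, that keeps a `T` in a shared box and copies the box on the first write after sharing. The names are invented here, and the sketch assumes that `T` itself has value semantics, which nothing in the code enforces.

```swift
// Hypothetical generic storage class.
final class Box<T> {
    var value: T
    init(_ value: T) { self.value = value }
}

// Hypothetical generic wrapper. It provides value semantics only if T
// is itself a value semantic type; the compiler does not check that.
struct CopyOnWrite<T> {
    private var box: Box<T>

    init(_ value: T) {
        box = Box(value)
    }

    var value: T {
        get { return box.value }
        set {
            if isKnownUniquelyReferenced(&box) {
                box.value = newValue      // not shared: write in place
            } else {
                box = Box(newValue)       // shared: give this copy its own box
            }
        }
    }
}

var first = CopyOnWrite([1, 2, 3])
let second = first           // shares the box with first
first.value.append(4)        // goes through the setter, which re-boxes

print(first.value, second.value)
```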