Hello, everybody. I'm excited to be talking about this today.

This is a subject that I've been looking into further and further, and as I was saying to someone just outside, it feels a little bit like where there's a little piece of string, and you pull on it, and you're like, "Oh, I'm going to get to the end of this thing in a minute, and then I'll have my talk all together and I'll understand it, and it'll be clear," and then you're like, "Okay, okay, there's more string here, okay... Oh my God, where does this end?"

So I'm excited that I've had the chance to think about this, and I hope this talk makes the topic a bit clearer. The subject is "protocols with associated types, and how they got that way" — maybe, because Apple is secretive, so we can't be exactly sure.

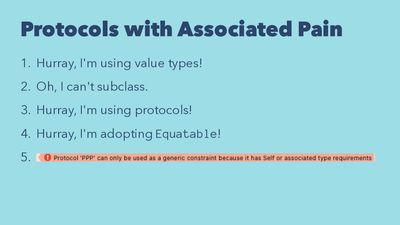

Protocols with associated types — why do they matter? The first reason is that they seem to cause a lot of pain. If you look on the internet, you see a lot of reactions a bit like this.

"Yes, I'm using value types, I'm using Swift, this is awesome!"

"Oh, I can't subclass value types! No problem, okay — I'm using protocols, and they're Swift protocols. Awesome!"

"All right, I need to implement
Equatable, because equality comes up a lot..."

And then, bam, right away you get this error:

Protocol whatever-it-is can only be used as a generic constraint, because it has Self or associated type requirements.

You hit this as soon as you're working with a protocol with associated types.

And at first you see this, and you're like, "What? What does this mean? Why is this happening? Is there a quick fix, is there a way I can go in and fix it quickly?"

And there's not.

When this comes up it often requires you to radically restructure your code. You're not going to be using protocols in the way you thought you were using them.

What the error is saying is, you can only use a protocol as a generic constraint, which basically means you can't use them for variable types.

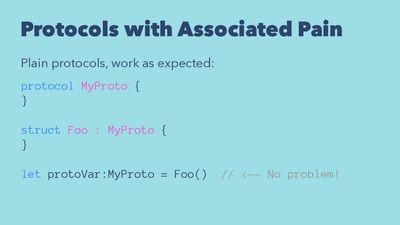

That's confusing, because normal protocols work something like you see here.

This is what we were all taught in OOP school, right? You've got your protocol, and then you have your type. Here it's a struct but it could be a class. And then you can define a variable, protoVar, which has the type of the protocol.

And now when I create an instance of my struct (or my class) Foo, because it adopts that protocol I am able to assign the instance into the variable. That's great.

And then in downstream code, I know that I'm working with something that's a MyProto. I can forget what it originally was. Maybe someone handed it to me and all I know is that it's a MyProto, so I know what its interface is, so I can call methods on it, no problem.
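A minimal sketch of the plain-protocol pattern just described. The names MyProto, Foo and protoVar come from the talk; the `greet()` requirement is made up for illustration.

```swift
// Hypothetical requirement: `greet()` is assumed here just to give the protocol an interface.
protocol MyProto {
    func greet() -> String
}

struct Foo: MyProto {
    func greet() -> String { return "Hello from Foo" }
}

// A plain protocol can be the declared type of a variable.
var protoVar: MyProto = Foo()

// Downstream code only needs to know the MyProto interface.
let greeting = protoVar.greet()
```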

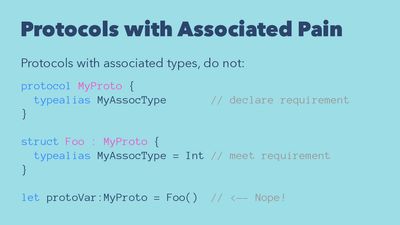

But in protocols with associated types, that's not what happens.

As soon as you have a protocol with an associated type (which you will have as soon as you add a typealias declaration inside the protocol definition), things are going to work differently.

The typealias declaration is declaring a requirement. Every adoption of this protocol needs to say what that typealias is.

So here it's an adoption of the protocol for my struct Foo. I'm saying Foo is adopting the protocol MyProto, and for its adoption of MyProto, the MyAssocType type, let's say, is Int, to meet the requirement.

But now, when I try to write that code at the bottom (which is probably the kind of code that I used to write for protocols every day of my life!), I get that error.
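Here is a rough sketch of that situation. Two assumptions: the `value()` requirement and the `read` function are invented for illustration, and the requirement is spelled `associatedtype`, which is how current compilers write what this talk calls a typealias declaration inside a protocol.

```swift
protocol MyProto {
    // Spelled `typealias MyAssocType` in the Swift version this talk describes.
    associatedtype MyAssocType
    func value() -> MyAssocType   // hypothetical requirement
}

struct Foo: MyProto {
    typealias MyAssocType = Int   // Foo meets the requirement with Int
    func value() -> Int { return 42 }
}

// In the Swift version discussed here, the next declaration is rejected
// with the "can only be used as a generic constraint" error:
// var protoVar: MyProto = Foo()

// What is allowed: MyProto as a constraint on a generic parameter.
func read<T: MyProto>(_ item: T) -> T.MyAssocType {
    return item.value()
}

let result = read(Foo())
```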

But, oftentimes people will not encounter this first when they are adding an associated type explicitly. As I mentioned, usually the first place people run into this (at least the first place I did), is when you're doing anything that involves equality.

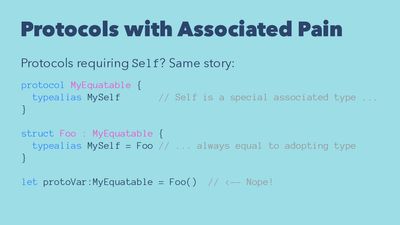

So what's the connection there? I'm not 100% sure of this, but my understanding from staring at this for a while is that basically any time you use Self with a capital S, Self is kind of like a built-in associated type. It's sort of a special associated type that's pre-defined to be spelled Self, and whenever you're adopting a protocol that uses Self, it's pre-defined that the adopting type is equal to the Self alias, or the Self type name.

If we were defining our own version of Equatable with our own stand-in for Self, it would be something like this. You'd have something called MySelf, and the adopting type would have to be the one set equal to MySelf, and that is exactly the situation we had before when we adopted the protocol explicitly.

That's why the same error covers both those two cases. You're using Self or you're using an associated type. The reason those things go together is because Self is actually just a special case of associated types that's built in, because it's a commonly needed one.
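To make the Self case concrete, here is a small sketch. MyEquatable, Point, `isEqual(to:)` and `allEqual` are hypothetical names standing in for Equatable and its users; this is not the standard library's definition.

```swift
// Hypothetical stand-in for Equatable, for illustration only.
protocol MyEquatable {
    // `Self` stands for whichever concrete type adopts the protocol.
    func isEqual(to other: Self) -> Bool
}

struct Point: MyEquatable {
    var x: Int
    var y: Int
    // Inside Point, `Self` has been filled in as Point.
    func isEqual(to other: Point) -> Bool {
        return x == other.x && y == other.y
    }
}

// As with an explicit associated type, the protocol works as a generic constraint.
func allEqual<T: MyEquatable>(_ items: [T]) -> Bool {
    guard let first = items.first else { return true }
    for item in items where !item.isEqual(to: first) {
        return false
    }
    return true
}

let same = allEqual([Point(x: 1, y: 2), Point(x: 1, y: 2)])
let different = allEqual([Point(x: 1, y: 2), Point(x: 3, y: 4)])
```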

And then, as soon as you say something's Equatable, you can't use it for a variable. Nope, can't be done, you get an error. So that's a bit of a surprise the first time you encounter it.

What does that mean?

First, it means that protocols with associated types are different enough that I suggest we stop calling them protocols with associated types. Also, my tongue is just going to wear out if I have to say that a hundred times during this talk.

So that's why I call them PATs. Makes them seem much more friendly and approachable. Protocol with associated type. PATs. And PATs are a bit weird.

Or at least they seem that way when you first run into them. So why? Why are they like this, and what's the best way to make use of them?

There's that saying that when you understand, you forgive. So I wanted to understand where protocols with associated types were coming from, in order to forgive them for being a bit frustrating. And this is a journey into trying to understand PATs.

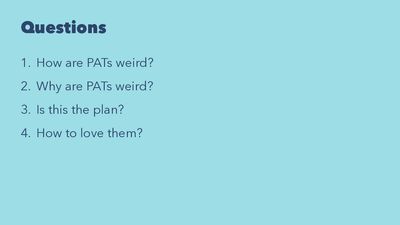

I've got four questions that I want to address in this talk, and I will feel good if I've given at least partial answers to all of these, and made things a bit clearer.

So the first question to think about is, how are PATs weird? Let's really name exactly the ways in which they are surprising or frustrating.

And then the second question is the deeper one, why are PATs weird? Why were they made this way? What's the trade-off that motivated producing these effects in order to produce some other kind of benefits? What's the point of this? Why are they like this? All the guys working on the Swift team, very capable guys and gals. I'm not saying they're making a mistake! They know more about this than I do, so I'm just trying to understand where they're coming from.

The third question: is this the plan? The things that are a bit frustrating about protocols with associated types. Is this how it's supposed to be? Is this how it's going to be indefinitely? Or is this work in progress stuff?

And then the last point is: how to love them — how to forgive them and understand them and make the best use of them.

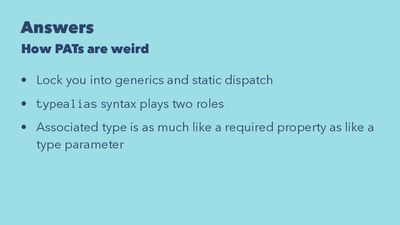

So let's dive in. First, just to quickly review how PATs are weird. I'm going to name three key aspects of their weirdness.

So the first one, like I mentioned, is this one that they're "only usable as generic constraints."

Now, why is this weird? This rule excludes PATs from literally every single use of protocols as defined in Objective-C. Literally.

The proof of that is quite simple. Objective-C lacked generic constraints. So of all the protocols that you used in Objective-C, none were being used as generic constraints.

PATs can only be used as generic constraints.

There's literally no overlap, QED.

So that's a little bit odd! They're called protocols, but literally every single protocol you ever wrote before, you could not write it with a PAT.

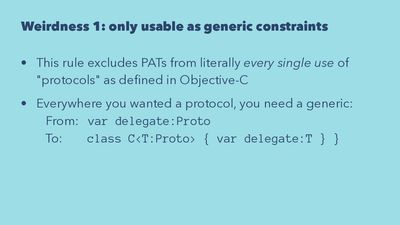

One effect of this is that everywhere that you wanted to have a plain old protocol, everywhere that you wanted to have for instance a delegate variable with a Proto type, now, in order to use a PAT, you will need to have a generic constraint instead.

So now, where I would have had a var declaring a delegate variable inside a class, well now that class will need to be a generic class, and it will need to be generic over a type parameter T that is constrained to the protocol, and then the delegate declaration will get that type parameter T as its type.

So everywhere that I would have had a simple protocol property, I now need to have a generic context for it. So the result is that now my whole class has become a generic class, which maybe is not what I wanted! This seems like a big change just in order to be using a PAT.
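A sketch of what that change looks like, under assumptions: the associated type Event, the `handle` method, and the Logger and Controller types are all invented for illustration.

```swift
// Hypothetical PAT: the associated type Event and the method are assumptions.
protocol Proto {
    associatedtype Event
    func handle(_ event: Event) -> String
}

struct Logger: Proto {
    // Event is inferred as Int from this signature.
    func handle(_ event: Int) -> String { return "event \(event)" }
}

// With a plain protocol this could be `var delegate: Proto?` in a non-generic class.
// With a PAT, the class itself takes a type parameter constrained to Proto.
final class Controller<T: Proto> {
    var delegate: T?
    func fire(_ event: T.Event) -> String? {
        return delegate?.handle(event)
    }
}

let controller = Controller<Logger>()
controller.delegate = Logger()
let message = controller.fire(7)
```

Note that every user of Controller now has to name the delegate's concrete type, which is the "whole class has become generic" cost described above.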

And another consequence of this is that, once you've done it, once you've switched to using a PAT instead of a plain old protocol, you've lost some things.

That little change did not get me exactly to where I was before, because now I've lost dynamic dispatch. And dynamic dispatch might have been the reason I was using a protocol to begin with!

Just to refresh here: if that were a plain old protocol, and I had an array of them, then underneath that array there could be a heterogeneous mix of different types that all conform to the same protocol. And then I could just go through them, knowing only the protocol. I don't know what's underneath it, but I know it has this method foo, and I call foo, and then dynamic dispatch is going to do the foo thing A if it's type A, and it's going to do the foo thing B if it's type B. That's dynamic dispatch, that's subtype polymorphism. It's useful.

But once you make this change so that you're using a PAT, then you're forced to use a generic context, and then the array that you get is not an array of Proto objects. It's actually an array of whatever the type is that adopts the protocol. So you're forced into a uniform array, and you no longer have dynamic dispatch, and you can no longer have a collection of delegates where all you know about them is that they all conform to the PAT. Instead you've got a collection of delegates where they're all some single type that is obliged to conform to the PAT.
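The contrast between the two kinds of array can be sketched like this. All names here (Shape, Circle, Square, Container, IntBox, `firsts`) are hypothetical.

```swift
// Plain protocol: values of different concrete types can share one array.
protocol Shape {
    func name() -> String
}
struct Circle: Shape { func name() -> String { return "circle" } }
struct Square: Shape { func name() -> String { return "square" } }

let mixed: [Shape] = [Circle(), Square()]
let mixedNames = mixed.map { $0.name() }   // each call is dispatched dynamically

// Generic context, as a PAT requires: T is one concrete type for the whole array.
protocol Container {
    associatedtype Item
    func first() -> Item
}
struct IntBox: Container {
    let value: Int
    func first() -> Int { return value }
}

func firsts<T: Container>(_ boxes: [T]) -> [T.Item] {
    return boxes.map { $0.first() }
}

// Every element has to be an IntBox; a second adopter could not be mixed in.
let uniform = firsts([IntBox(value: 1), IntBox(value: 2)])
```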

So you've lost a significant language feature by making this switch to PAT — and that may have been the very feature you were depending on when you decided you wanted to use a protocol to begin with!

So this "only usable as a generic constraint" feature is genuinely restrictive, and I think it's fair to be surprised, especially because when you look at the docs and communication from Apple, there's a lot of language that describes protocols as "real types" in Swift.

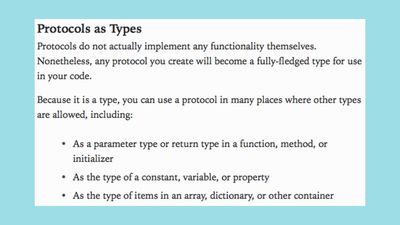

Apple is very clear about this.

Here in the Swift Programming Language book, it says,

Any protocol you create will become a fully fledged type for use in your code. You can use a protocol in many places where other types are allowed. As a parameter type, return type, type of a constant, variable, property, type of items in arrays, dictionaries.

That's actually a list of all the places that you cannot use a protocol with an associated type! Literally. Those are all the places you cannot use one. Which is I guess why they have the word "many" in there, just to make it a bit ambiguous.

And there's Apple's "Protocol-Oriented Programming" talk, where the speaker says, "Any type you can name is a first-class citizen," when he's talking about protocols.

So if you find this behavior of PATs confusing, if it surprises you a bit, relax. Your brain is functioning properly. You should be confused by it, I think.

This expectation that they're "real types" is the second weird thing about PATs.

The third thing that's a bit weird about PATs is the way the typealias keyword works. So let me say a little bit about that, as I think it's the natural entry for diving deeper into the topic, and getting a sense of where they come from and how to understand them and appreciate them and enjoy them.

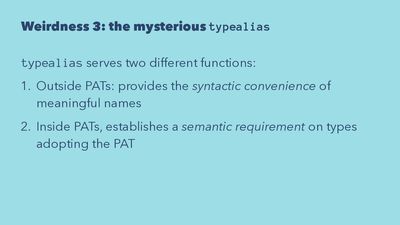

typealias serves two very distinct functions in the language right now.

The first function is, any time you use the identifier typealias outside of a PAT, it's really just a syntactic convenience. It's like a typedef. It just gives you an opportunity to create a shorter and more meaningful name for what could be a long and awkward type name. I think it would be correct to say that it's not doing anything semantically. If you had a really great short term memory, you could go through and take all the typedefs and typealiases, and just throw them out and replace them with the fully specified name.

It's not concealing anything or requiring anything or doing anything, it's just saving your brain from having to keep track of a longer and more opaque name.
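A tiny sketch of that first role. The alias name Scores is made up.

```swift
// Outside a protocol, typealias only introduces a shorter name.
typealias Scores = [String: [Int]]

let viaAlias: Scores = ["ann": [1, 2]]

// The alias and the spelled-out type are the same type, so no conversion is needed.
let spelledOut: [String: [Int]] = viaAlias
```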

But inside a protocol with associated type, as I mentioned earlier, typealias is doing something very different. Typealias is actually establishing a semantic requirement for adopting the protocol. So it's establishing the requirement that any type that adopts this protocol needs to implicitly or explicitly specify which type is associated with this typealias.

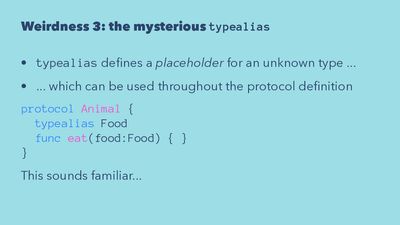

typealias is, in this case, effectively defining a placeholder for an unknown type.

For example here's a protocol Animal, and every animal eats some kind of food, and let's say Food's also a type, but until I know which animal adopts it I don't know what kind of food it is.

So I can say, "Well, there's some type we will call Food, but I don't know the exact type yet. Whatever type wants to be an animal, it needs to have a function eat, and eat means you need to eat this Food type, and whatever it eats, that's your preferred food."
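In code, the placeholder role might look like the sketch below. It uses the current `associatedtype` spelling; the Horse and Hay types and the String return value are assumptions added so there is something to run.

```swift
protocol Animal {
    associatedtype Food          // placeholder: each adopter says what it eats
    func eat(_ food: Food) -> String
}

struct Hay {}

struct Horse: Animal {
    // Food is inferred as Hay from this signature.
    func eat(_ food: Hay) -> String { return "Horse eats hay" }
}

let meal = Horse().eat(Hay())
```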

This requirements-defining role of typealias is intriguing, because it sounds a bit familiar, doesn't it?

You look at this, and you think, "Well, typealias introduces this identifier that I fill in later. This feels a lot like a generic type parameter."

That's another case where we have syntax that specifies that we're introducing an identifier that will be a placeholder for a type that's not known yet, but can still be used throughout our definition.

So for instance here, if I were defining a struct Animal that's generic over a type parameter, I could use Food as the name of that parameter, and then I could say, "if I create a specialization of Animal on a particular type of Food, then that specialization eats that type of food."
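For comparison, a sketch of the generic-struct version. The `eaten` storage and the Grass type are assumptions for illustration.

```swift
struct Grass {}

// Here Food is a generic type parameter rather than an associated type.
struct Animal<Food> {
    var eaten: [Food] = []
    mutating func eat(_ food: Food) {
        eaten.append(food)
    }
}

// Specializing on Grass yields an animal that eats Grass.
var grazer = Animal<Grass>()
grazer.eat(Grass())
let mealCount = grazer.eaten.count
```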

But that leads to a natural question: "Well, all these things that are odd about PATs, is that because PATs are really under the surface or conceptually best understood as generic protocols? Where associated types are like type parameters in generic types? Are they really just generic protocols, with a little bit of a wacky syntax? Does that explain everything?" This was the road I started going down first.

No. The answer's no.

They're a bit like that, but I don't think that's the most productive way to think about them.

And they would be different if they actually were like that.

But they are a bit like that.

So, "No — and yes, sort of".

But asking why explains a lot, and reveals what I suspect is probably the thinking that drove this feature, though I'm speculating a bit.

Let's go to question number two, having now laid out how PATs are weird. Why are they like this?

In other words: what is the problem that associated types solve?

They can be used for a number of things, but I think a good way to get at it is to say that what associated types do is they solve problems where you can't represent type relationships through the tools that you have with normal object oriented programming based subtyping.

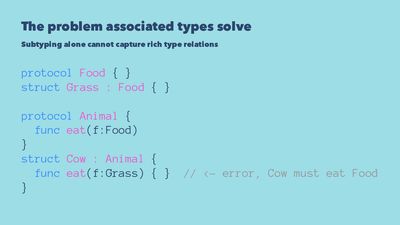

This is a classic example. Let's say I have a protocol, Food, and then Grass is a kind of Food, so it's adopting that protocol.

And let's say I have a protocol, Animal, and every animal eats some kind of food, but here I'm not using associated types. I'm just saying animals eat food.

Now let's say I want to specify that a Cow, which is a kind of Animal, eats a particular kind of Food, Grass.

If you try to do this, you get an error. And this isn't really specifically a problem with protocols. You'd see the same thing here if I defined food as a class and grass as a subclass, and animal as a class and cow as a subclass. In that case I'd have an override there.

This is not an issue with protocols. This is an issue with having only the hierarchical structure that inheritance and subclasses give you.

The error is because, if you tried to do that, having an override for a subclass or just adopting a protocol, you've violated the substitutability requirement for a subclass.

Everything that's an animal needs to be able to eat type food, so if I create a kind of animal that doesn't eat food in general, that only eats grass, then I can't take an instance of that. I can't take a cow and just give it to you to use as an animal.

Let's say you had a function that took an array of Animals, and it went through all of them, and it just gave them all Food objects. Maybe the Food is, I don't know, Meat. Then what if one of those animals is actually a Cow and only eats Grass? Then that would fail.

Because of this, you can't actually represent this kind of type relationship with normal object-oriented subtyping. I think the technical language for this is talking about covariance requirements on the arguments of the methods. I don't know if that clarifies it, but it's impressive language!
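A runnable sketch of the problem. Since the narrower `eat` is rejected by the compiler, this version keeps the general signature and checks the preference at run time; the Bool return value, the Meat type and `feedAll` are assumptions for illustration.

```swift
protocol Food {}
struct Grass: Food {}
struct Meat: Food {}

protocol Animal {
    // Returns whether the food was accepted (a hypothetical detail for this sketch).
    func eat(_ food: Food) -> Bool
}

struct Cow: Animal {
    // Declaring only `func eat(_ food: Grass) -> Bool` would not satisfy Animal,
    // so the preference has to be checked at run time instead.
    func eat(_ food: Food) -> Bool {
        return food is Grass
    }
}

// A caller that knows only about Animal may hand over any Food.
func feedAll(_ animals: [Animal], _ food: Food) -> [Bool] {
    return animals.map { $0.eat(food) }
}

let meatResults = feedAll([Cow()], Meat())
let grassResults = feedAll([Cow()], Grass())
```

The type system has no way to stop the Meat call here, which is the gap the associated type is meant to close.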

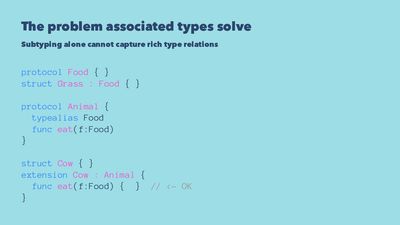

But with an associated type, you can be more precise.

You can say, "Every animal eats some kind of food," and when you declare what you want to be an animal, you can just specify what kind of food it is, and now it's okay.

So this is a toy example, but it gets at what protocols with associated types are trying to do — they provide a way for expressing type relationships that you can't fit into an object oriented hierarchy.
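The same toy example with an associated type might be sketched as follows. The associated type is named Meal here only to avoid clashing with the Food protocol; that name and the String return value are assumptions.

```swift
protocol Food {}
struct Grass: Food {}
struct Meat: Food {}

protocol Animal {
    associatedtype Meal: Food     // hypothetical name for the associated type
    func eat(_ food: Meal) -> String
}

struct Cow: Animal {
    // Meal is inferred as Grass, so a Cow accepts Grass and nothing else.
    func eat(_ food: Grass) -> String { return "Cow eats grass" }
}

let cowMeal = Cow().eat(Grass())
// Cow().eat(Meat()) would be rejected at compile time.
```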

Okay. That's a bit of context, but it doesn't really explain this question of why they work quite the way they do.

Specifically it doesn't explain why aren't they correctly thought of just as generic protocols? Why aren't they defined using the syntax that you'd expect for generic protocols?

Because you could imagine that, in some imaginary version of Swift, this is how it would work. You have a protocol for animal, and it's parameterized on the type parameter which we've called food, because we know our meaning for that is food, and then if I wanted to say that cow was adopting this, I'd do it by saying, "Cow is adopting animal. What kind of animal? Okay, animals that eat grass."

That's how it could work. So why doesn't it work this way?

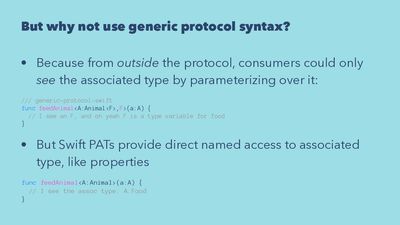

This part's a bit subtle, but I think the answer has to do with the way that the typealias-style definition of an associated type, as opposed to the generic parameter style definition of associated type, exposes that type.

Let's say we did have generic protocols in this way, and you are consuming it. Then from the outside of the protocol, the only way you can see the associated type in order to declare constraints on it or do other things with it is by parameterizing over it. If I wanted to feed an animal, I have to say, "Feed animal A," where A is a type of animal, oh, but I also need to have the F in there, and the F appears on the feedAnimal signature. But then inside the implementation of feedAnimal, you'd be able to see F, because it's one of the type parameters that you are parameterizing over for the definition of that generic function.

But with a Swift-style PAT, because the typealias acts more like a member of the type, you have direct named access to it. So I still need to parameterize over Animal. (This is just because you always need to parameterize over a protocol with associated type; you can't just pass it in as a reference. That's something you can't get around.) But once you're inside feedAnimal, you can directly figure out what that associated type is, just by accessing it with normal dot-based accessor notation.

And if you look at that notation, it gives you a hint of what's going on here. The syntax that's being used means that the associated type is a lot more like a property of a type than it is like the generic parameter that was used to define a type. You're accessing it with a dot, and just like when I define a protocol and say you have to implement this function, you have to implement this variable. Now we're also saying, you also have to specify this type. And then that type is accessible. It's just another one of the axes of customization that you're providing, any time you subclass something, or any time you implement a protocol.
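A sketch of the dot-based access in the Swift-style version. feedAnimal is the name used in the talk; its exact parameters, the Cow and Grass types, and the String return value are assumptions.

```swift
protocol Animal {
    associatedtype Food
    func eat(_ food: Food) -> String
}

struct Grass {}
struct Cow: Animal {
    func eat(_ food: Grass) -> String { return "Cow eats grass" }
}

// Only the animal type A is a parameter; its food type is reached as A.Food.
// In the imagined generic-protocol design, a second parameter F for the food
// would also have to appear in this signature.
func feedAnimal<A: Animal>(_ animal: A, _ food: A.Food) -> String {
    return animal.eat(food)
}

let fed = feedAnimal(Cow(), Grass())
```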

So that's a difference, but you might have been looking at that and saying, "Well, that version on the top doesn't look so bad. Okay, my type parameter appears on the signature of things that are consuming the protocol. Is that so bad?"

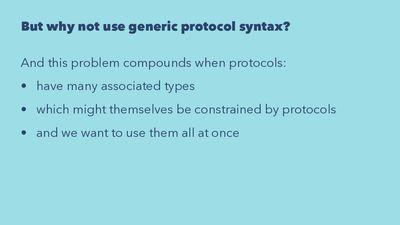

Maybe it's not so bad for simple cases like this, but it turns out that this problem gets a lot worse when you're doing something heavier weight with it.

If you have many associated types — not just "I eat this kind of food", but also "I need to live in this kind of dwelling", and then "I need to get this kind of drink", and then "I need to be near this other kind of animal" — if you have a whole bunch of associated types in the protocol, it gets more complicated.

And it gets much worse if the associated types themselves have associated types, and also if you're using a lot of protocols simultaneously.

When you add all of those things together, then the type constraint specification that you would need to put on the consumers of these generic protocols gets very complicated, because all these factors are being exposed to the consumer of the protocol. They're not embedded in the definition of the protocol.

Now I'd like to have a really tidy example here, where I show you how it gets bad with the imaginary animal example in Swift. I started working on that, but I confess, it gets messy right away, so I think it's a bit more useful to just look at other examples from other languages that did this.

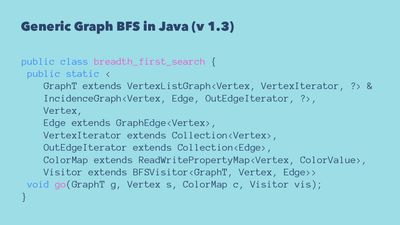

Here's an example from a generic library for doing a breadth-first search on a graph, in Java version 1.3. If you specify the command to start the breadth-first search on this generic graph library in Java, that is the type specification you see on the signature.

What you're seeing there is a result of the fact that the type constraints involved in the associated types don't get embedded in a PAT-like structure. Instead they're pushed out, and you have to interact with them from the outside and specify them and maintain these invariants from the outside.

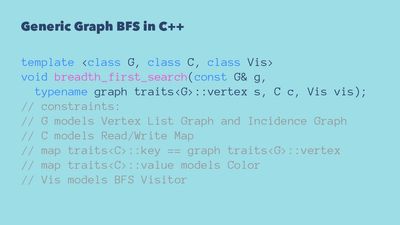

Whereas in contrast, if you do a similar implementation of a generic graph breadth-first search in C++, which ends up having better support for this kind of thing, the actual signature is much simpler.

Here you can see there's only three type parameters instead of one, two, three, four, five, six, seven... Instead of eight type parameters. And you can see what's going on there in the traits declaration. There's typename graph traits G, vertex s. What that's saying is, okay, I'm generic over the class G, which is the type that defines the type of graph I have, and from that type I can get the associated type vertex, that tells me the type for the vertex.

So if I have a graph where every node is an integer, and I just tell you, this is the type that defines the graph, you can access the fact that that type requires that the types of the individual nodes is integers. You don't need to separately specify that.
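The same idea can be sketched in Swift. This is not the C++ code from the slide: the Graph protocol, IntGraph, `neighbors(of:)` and `breadthFirstOrder` are hypothetical names chosen for illustration. The point is that the search is generic over one type, and the vertex type comes along with it.

```swift
// Hypothetical graph protocol for illustration; not the library shown on the slides.
protocol Graph {
    associatedtype Vertex: Hashable
    func neighbors(of vertex: Vertex) -> [Vertex]
}

// A graph whose nodes are integers. Vertex is inferred as Int.
struct IntGraph: Graph {
    var edges: [Int: [Int]]
    func neighbors(of vertex: Int) -> [Int] {
        return edges[vertex] ?? []
    }
}

// One type parameter, G. The vertex type is reached through G.Vertex.
func breadthFirstOrder<G: Graph>(_ graph: G, from start: G.Vertex) -> [G.Vertex] {
    var visited: Set<G.Vertex> = [start]
    var queue: [G.Vertex] = [start]
    var order: [G.Vertex] = []
    var head = 0   // index of the next vertex to take from the queue
    while head < queue.count {
        let current = queue[head]
        head += 1
        order.append(current)
        for next in graph.neighbors(of: current) where !visited.contains(next) {
            visited.insert(next)
            queue.append(next)
        }
    }
    return order
}

let graph = IntGraph(edges: [1: [2, 3], 2: [4], 3: [4], 4: []])
let order = breadthFirstOrder(graph, from: 1)
```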

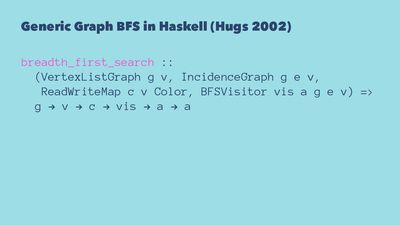

This is a functional programming conference, so I feel like we have to put Haskell on the board as well.

This is old Haskell. This is Hugs 2002. But this is showing similarly that you get this simpler syntax when you have this ability to embed information about the associated types.

Now, why am I showing examples from 2002, 2003? The reason is, I didn't come up with these examples myself!

These were examples that I encountered in the course of trying to understand the motivations behind the Swift design, and I think the magic key for understanding this more deeply is this document I found, which is a paper published finally in the Journal of Functional Programming in 2007 — "Extended Comparative Study of Language Support for Generics." And this reviews in detail the generic support in Haskell, Standard ML, Java (1.3 at the time), C#, C++, a language called Cecil, and Eiffel.

It implements a significant generic graph library in all of these languages, and then it looks at what are the problems?

Oh, it was really easy to do in this language, it was harder to do in this language, it was okay in this language.

And then based on that work (which represents quite a few people working over a few years!) there's a very systematic discussion of, "Well, okay, here is the generalized statement of the language features. And this language has these features, this language has these features, these ones are important for this reason."

It's quite dense, but it's a great document.

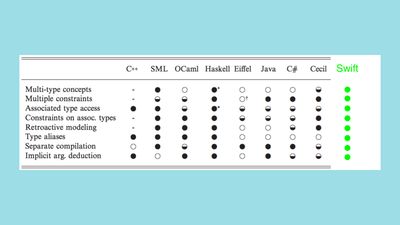

One of the outputs of it is this awesome table, where they have on the left a column that shows generic-oriented language features, and then the languages, just showing how they stack up.

So, "multi-type concepts" — that is protocols with associated types. "Concept" in C++ is a lot like a protocol with associated type. "Multi-type" means that you can have multiple associated types. Multiple constraints means you have lots of constraints on the types. At the moment, Swift lets you apply conformance constraints to the associated types, maybe there's more coming.

"Associated type access" — this is the thing I was getting at about how easily you can figure out what the associated type is.

"Constraints on associated types."

"Retroactive modeling" — that means being able to apply an extension to a type that someone else defined, or after it's been defined.

"Typealiases" — sounds familiar!

This was published finally in 2007, but if you go through and look at Swift, Swift ticks all the boxes. It does a great job of it. Bravo, Swift team!

And I don't think it's entirely a coincidence. I would be astonished if they had not read this.

In fact, I think there's a lot of circumstantial evidence that they have. For instance, a lot of the terms that this paper invented to describe these features, it invented in order to describe them in a general way. It was covering different languages. So some of these languages don't call them typealiases. Some of these languages don't call them associated types. But Swift does.

It seems a little bit like when they were thinking about how to define these language features in Swift, they sort of took this article, said, "Great, this is how we do it. Why don't we just call them typealiases? Because it seems like we want to have those."

I'm speculating, but there's something to this I suspect.

And also if you look at the redoubtable Dave Abrahams' talk, Protocol Oriented Programming, and look at the language he uses, there are times when the terminology he used shifts a little bit to language which is not in the Swift Programming Language book, but is in this paper.

So he talks about "modeling the concept," which is something that's in this paper, although that's also the language that comes from the C++ world, and all the Swift folks are deep into C++, so maybe that's it.

I think there's a lot of evidence that this paper was certainly insightful for Swift, and may have had an influence on the team that was working on the features.

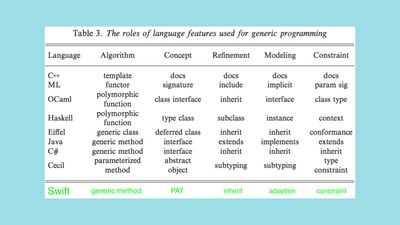

Another interesting table here is just describing how some of these different operations and procedures are named in the different languages.

You can see how things map up. What they call a concept — for instance a type class in Haskell — is most analogous to a protocol with associated type in Swift.

A refinement operation is one protocol inheriting from another, is what the SPL book calls it.

Modeling, that's what we call "adopting a protocol".

Constraints are called constraints.

So Swift fits in quite nicely.

I'm also aware that, when you look at some of the examples I showed, if you're coming from a more purely functional background, you might say, "Well, Haskell actually has features that are even better for this."

But in fact, if you looked closely at this you saw there were asterisks here, because even excellent Haskell's support wasn't quite perfect, and developing this library exposed places where it was a bit awkward.

The reason that you might be thinking Haskell's great for some of these things is because, actually, this paper had a huge influence on Haskell!

This article by Simon Peyton-Jones and Chakravarty and Keller explicitly references the 2003 version of this paper, and the difficulties that it exposed in handling certain kinds of scenarios with the Haskell type system, and proposed amendments that are now part of Haskell for handling these things.

So it's all one. It's a small world and people are paying attention to each other.

Another relevant body of literature is the work around Scala.

Scala is probably a closer analogy than any of these other languages mentioned, because Scala is this hybrid thing where functional aspects of it have been done in a very deliberate and functional-friendly way. But it also needs to integrate with a pre-existing hierarchy OOP-based type system, the one that you get from the JVM.

If Scala were a column here, I think you'd see what are called "abstract type members" in Scala are the closest analog to associated types and PATs in Swift.

So that's a bit of a context on where it's coming from.

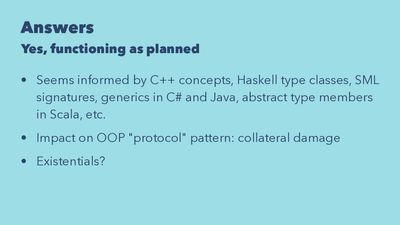

Question number three. Is this the plan?

Really? This is how it's going to be going forward, where we're going to have to make everything generic in order to use protocols with associated types at all?

Am I going to have to turn all of my code generic, just in order to deal with things to support equality?

I think for the moment, yes, it does seem that way. Although who knows what the future holds?

I know that certainly around August of this year, before WWDC, there were blog posts where people were saying, "Oh, I imagine they're going to fix this soon. This can't be the way it's going to be."

But when the protocol oriented programming talk came out, it seemed to say that this is the plan.

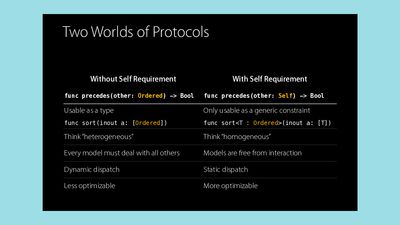

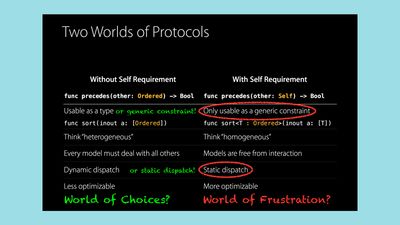

This is I think the key slide from Dr. Abrahams's talk Protocol-Oriented Programming. He lays out the two worlds, where he's calling one the world without Self-requirement and the other one the world with Self-requirement. But this applies not just to the Self-requirement, but to any associated type.

You can see here the contrast — there's the contrast between dynamic dispatch, which is func sort(inout a: [Ordered]), and static dispatch, which is func sort<T:Ordered>(inout a:[T]), parameterizing over ordered things, because Ordered is a PAT.
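A sketch of the static-dispatch side in current syntax, where `inout` is written before the parameter type. The function is named sortOrdered rather than sort, and the Number type and `precedes` label are assumptions, not the slide's code.

```swift
// World with a Self requirement: Ordered is a PAT, so it constrains T.
protocol Ordered {
    func precedes(_ other: Self) -> Bool
}

struct Number: Ordered {
    var value: Int
    func precedes(_ other: Number) -> Bool { return value < other.value }
}

// Static dispatch: T is one concrete type, known at each call site.
func sortOrdered<T: Ordered>(_ a: inout [T]) {
    a.sort { $0.precedes($1) }
}

var numbers = [Number(value: 3), Number(value: 1), Number(value: 2)]
sortOrdered(&numbers)
let sortedValues = numbers.map { $0.value }
```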

And then you have an array there, or there at the bottom, dynamic dispatch, static dispatch.

Now if you were a skeptic, you might say that some of the things on the right side were not great.

"Only usable as a generic constraint" imposes a lot of requirements on how you use it. All I want to do is use Equatable, but now I'm stuck with generics and static dispatch.

So are these the two worlds? Is this one the world of frustration?

I don't think it needs to be that way, but I can understand why people are frustrated when they bump into this issue and don't understand the motivation behind it.

But one thing I think that this slide doesn't represent is that the other world, where you don't have PATs, is not limited to those things.

Maybe this world on the left is the world of choices. Like yeah, you have the option of dynamic dispatch if you're not using a PAT, if you're using a plain protocol. But you can still do static dispatch as well when you're not using a PAT, so it's not like you're locked into dynamic dispatch on one side, and you're locked into static dispatch on the other side.

Actually there's one side where you can do either, and then there's another side where you're stuck with static dispatch. You could use types as generic constraints, if you wanted to, on the non-PAT side of the protocol world.
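A small sketch of that point, assuming a hypothetical plain protocol called Greeter (all names here are invented for illustration): the same non-PAT protocol can be used as a type and as a generic constraint.

```swift
// A plain protocol with no Self or associated type requirements.
protocol Greeter {
    func greet() -> String
}

struct English: Greeter {
    func greet() -> String { return "Hello" }
}

struct French: Greeter {
    func greet() -> String { return "Bonjour" }
}

// Used as a type: the array may mix conforming types, and greet() is
// dispatched dynamically.
func greetAll(_ greeters: [Greeter]) -> [String] {
    return greeters.map { $0.greet() }
}

// The same protocol used as a generic constraint: the concrete type G is
// known at each call site, so the call can be dispatched statically.
func greetTwice<G: Greeter>(_ greeter: G) -> String {
    return greeter.greet() + " " + greeter.greet()
}

let everyone = greetAll([English(), French()])
let doubled = greetTwice(French())
```

With a PAT, only the second form was available at the time of the talk.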

But based on this talk, you might get the impression that this is the intended design of the language, and these restrictions around PATs are things we should learn to live with and embrace and appreciate.

I did put a little asterisk on that "yes", and the asterisk represents existentials.

Now, I haven't reviewed this material very deeply — in fact, Colin pointed it out to me about 30 minutes ago, to be honest, which was embarrassing. But "existentials" seems to be the name that you'll see in Haskell or the world of statically polymorphic, parametrically polymorphic languages, to describe the kinds of dynamic dispatch that you'd get in object oriented languages.

So basically if you want to have a PAT and then have a reference to it, rather than have to use it as a generic constraint, what you would need for that is for Swift to support existentials.

And are they coming? I don't know. We don't have anyone here from the Swift team to tell us the truth, but if you go and look inside the standard library, you do see some evidence that they regard it as a bug that they don't exist yet.

For instance, they have a FIXME. So there are features in the standard library right now that are described as, well, workaround for the inability to create existentials. There's a radar for it.

So maybe existentials are coming down the road, and in that case it will be a better world. In the meantime, there are workarounds that you can define, and indeed you can see examples of them in the standard library. Types like AnyGenerator or AnySequence are type-erasing wrappers that you can define in order to work around the fact that the language doesn't define existentials.
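As a brief sketch of what the standard library wrapper buys you, the following puts two different concrete sequence types into one array by wrapping each in AnySequence. (AnyGenerator has since been renamed AnyIterator; the variable names below are just for illustration.)

```swift
// Two different concrete sequence types with the same element type:
// an Array<Int> and the StrideTo<Int> returned by stride(from:to:by:).
let fromArray: AnySequence<Int> = AnySequence([1, 2, 3])
let fromStride: AnySequence<Int> = AnySequence(stride(from: 10, to: 40, by: 10))

// Because both are now AnySequence<Int>, they fit in one homogeneous array.
let sequences: [AnySequence<Int>] = [fromArray, fromStride]

// Each wrapper still behaves like a sequence of Int.
let totals = sequences.map { $0.reduce(0, +) }
```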

You can also define your own. It's a somewhat irritating exercise, but it can be done. So for instance, I found myself building one for AnyInterval. There's a protocol with associated type, a PAT, that defines the interval type, but that interval type has an associated type which is the type of the scalar value that defines the intervals.

So let's say you wanted to have an array of intervals, where some of them were closed-open intervals, others were open-open intervals, and others were closed-closed intervals, in order to define an array of interval objects that represent all the possible values between 0 and 1 inclusive. If you want to just go through that array and check if something is contained by any of them, well, you can't do that with a PAT. Because it's a PAT, you can only create homogeneous arrays, so you need to create a type-erasing wrapper in order to create an array of interval objects.
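A minimal sketch of such a wrapper, under stated assumptions: the Interval protocol, the two concrete interval structs, and AnyInterval itself are hypothetical types written for this example. They are not the standard library's interval types and not the speaker's actual implementation.

```swift
// Hypothetical PAT: the associated type is the scalar the interval is over.
protocol Interval {
    associatedtype Bound: Comparable
    func contains(_ value: Bound) -> Bool
}

// lower <= value < upper
struct ClosedOpen<B: Comparable>: Interval {
    let lower: B
    let upper: B
    func contains(_ value: B) -> Bool { return lower <= value && value < upper }
}

// lower <= value <= upper
struct ClosedClosed<B: Comparable>: Interval {
    let lower: B
    let upper: B
    func contains(_ value: B) -> Bool { return lower <= value && value <= upper }
}

// Type-erasing wrapper: it remembers only the Bound type and forwards
// contains(_:) through a stored closure, hiding the concrete interval type.
struct AnyInterval<Bound: Comparable>: Interval {
    private let containsImpl: (Bound) -> Bool

    init<I: Interval>(_ base: I) where I.Bound == Bound {
        containsImpl = { base.contains($0) }
    }

    func contains(_ value: Bound) -> Bool { return containsImpl(value) }
}

// A mixed collection of interval kinds that together cover 0...1 inclusive.
let pieces: [AnyInterval<Double>] = [
    AnyInterval(ClosedOpen(lower: 0.0, upper: 0.5)),
    AnyInterval(ClosedClosed(lower: 0.5, upper: 1.0)),
]

// True if any interval in the array contains x.
func covered(_ x: Double) -> Bool {
    return pieces.contains { $0.contains(x) }
}
```

Each additional protocol requirement would need its own stored closure and forwarding method, which is where the nuisance comes from.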

It can be done, I can show that later if people are curious, but it's a bit of a nuisance. Maybe it'll become automatic if the language gains support for existentials.

But again, I'd say to understand is to forgive. And understanding why PATs work the way they do gives us some perspective.

Let's review the four questions, to pull all this into shape.

How are PATs weird?

- I think the essence of it is that they lock you into generics and static dispatch, which maybe was not what you wanted. Maybe all you wanted was to be able to define equality.

- typealias as a keyword specifically is a bit misleading, because it does two very different things. One is a handy, convenient syntax thing. The other is that it establishes a requirement on a protocol.

- And when you think about what an associated type is, I think it's as much like a required property as it is like a type parameter. That's a good guide to have in the back of your mind.
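A short sketch of the "required property" view, using a hypothetical Container protocol (all names invented here). It uses the associatedtype keyword that current Swift requires inside protocols, where the talk's Swift version used typealias for both roles.

```swift
protocol Container {
    // A requirement, like a required property: every adopter must say
    // what Item is.
    associatedtype Item
    var items: [Item] { get }
}

struct IntBox: Container {
    // Here typealias satisfies the requirement explicitly.
    typealias Item = Int
    let items: [Int]
}

struct NameList: Container {
    // No typealias: the compiler infers Item == String from `items`.
    let items: [String]
}

// The associated type is read off the adopting type, much like a property
// is read off a value.
func firstItem<C: Container>(_ container: C) -> C.Item? {
    return container.items.first
}

let firstNumber = firstItem(IntBox(items: [7, 8]))
let firstName = firstItem(NameList(items: ["Ada", "Grace"]))
```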

Again, why are PATs weird?

What's the motivation behind this?

The fundamental motivation behind PATs is to enable rich multi-type abstractions that don't fit into the pure hierarchy-like structure that you get with object oriented programming.

And why are they like properties of a type instead of generic parameters?

It seems like this is a very deliberate choice to work around well-known and discussed issues in the literature, where generic protocols don't scale well, and they don't really encapsulate the type invariants that they want to maintain in a nice way.

That's where they're coming from, that's why they work the way they work.

Now, are they functioning as planned?

I think they seem to be, in that they're informed by a lot of research, a lot of comparison with other languages and similar language features.

If you look at all those languages, all those languages are not as much oriented around dynamic dispatch as Swift is. (I mean, C++ has virtual functions, but for the most part the thinking that seems to have informed this feature is thinking that was focused on languages that were more static.)

That's where the staticness is coming from. I think the impact on our traditional object oriented programming notion of a protocol is collateral damage.

But maybe this isn't the end of the story, maybe Swift's going to get existentials, and then we can be able to use PATs in the way that we're familiar with using plain old protocols.

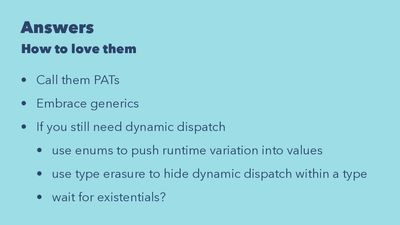

Last question was number four: How to love them? How to embrace PATs rather than calling them weird for half an hour?

I think the first step is: call them PATs.

Makes them seem so much more friendly and familiar. They're just PATs. They're not bad guys, they're just PATs. Also I think giving them a new name acknowledges that it's a new thing.

PATs aren't the Swift version of protocols from Objective-C. They're actually a new thing that has new capabilities. You can do certain kinds of modeling of multi-type abstractions in a way that you simply couldn't have done before.

It's not a broken version of an old thing. It's actually a new thing, deserves a new name, and that's a good way to think about why it also has new trade-offs.

So call them PATs, and if you're going to use them, then you need to embrace generics, at least at the moment, because they can only be used as generic constraints, so get comfy with that idea.

If you still need dynamic dispatch, or if you need something like dynamic dispatch because of the purpose that you had in mind when you decided you wanted to use a protocol, I have three bits of advice.

It's the end of this talk so I can't go into it in detail, but:

- One option is, instead of relying on dynamic dispatch, use enums and push the runtime variation down from the type level to the value level. So instead of having a type, and then five different subtypes and using dynamic dispatch to pick the one you want, actually create an enum that has five different cases, and then inside it you could have an embedded switch, and that switch chooses between the different values that you want.

  Maybe that's a better way to model your situation to begin with. In the example I was working on with intervals for myself, I have one solution with a type-erasing wrapper, another with enums, so there's workarounds.

- The second option is the type-erasing wrapper to hide dynamic dispatch inside a type, and this is what the standard library does when it would like to have a plain old protocol or an existential, or whatever you want to call it.

- Or if you still need dynamic dispatch, maybe wait a year and Swift 3-point-whatever will come out, and then we'll have existentials, and then we can all just go back to our old ways?
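The first option, pushing the variation into an enum, might look roughly like this. UnitPiece and its cases are hypothetical names for this sketch, fixed to Double for brevity; it is not the speaker's interval code.

```swift
// One type with three cases, instead of three types behind a protocol.
enum UnitPiece {
    case closedClosed(Double, Double)
    case closedOpen(Double, Double)
    case openOpen(Double, Double)

    // The switch does the job that dynamic dispatch would otherwise do.
    func contains(_ x: Double) -> Bool {
        switch self {
        case .closedClosed(let lower, let upper):
            return lower <= x && x <= upper
        case .closedOpen(let lower, let upper):
            return lower <= x && x < upper
        case .openOpen(let lower, let upper):
            return lower < x && x < upper
        }
    }
}

// An ordinary array of one type, holding different kinds of interval.
let unitPieces: [UnitPiece] = [
    .closedOpen(0.0, 0.5),
    .closedClosed(0.5, 1.0),
]

// True if any piece contains x.
func inUnitRange(_ x: Double) -> Bool {
    return unitPieces.contains { $0.contains(x) }
}
```

The trade-off is that every new operation needs its own switch, and adding a case means revisiting each of them.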

So that's my detective work on protocols with associated types and how they got that way.

When I started doing this, Apple was still a black hole, or an opaque wall. It was very hard to figure out what they were thinking, because of their secrecy. You could only get hints of it on Twitter.

But now, since the open source announcement a few days ago, since this new glasnost era of open discussion about where Swift is going, maybe we can just ask them, and then we'll find out, and it makes a lot of this detective work and kremlinology a bit unnecessary! So we can call them up later.

I'd be delighted to answer any questions.

Questions & Answers:

Hi, that was a very interesting talk, it explained a lot of concepts for me. Can you elaborate a little bit on what makes these constraints? I'm not really familiar with that term being used, in the context of a type being associated with a protocol. Is it just the fact that they introduce something that needs to be implemented? Or...

Do you mean the basic constraint that they're under, of they can only be used as generic constraints?

I guess, could you define "constraint" in this context?

Yes, okay. So there's a few senses in which "generic constraint" is the key to all this, so let me just focus on the most basic one at the very top, because I think that's what's puzzling about the error message the first time you see it.

If you look here, the error message you get when you try to create a variable that contains a protocol that uses Self (or a protocol that uses some other associated type, like I'm doing here at this bottom line of this slide), the error that you get is this one. And the exact language is, "protocol —whatever it is that you're trying to put— can only be used as a generic constraint." So let's really break that down, and what does it mean? The problem is that here, we're not using that protocol as a generic constraint. The protocol here called MyEquatable is being used as the type of a variable, of the variable protoVar.

So what would it mean to use it as a generic constraint? It would mean this case here. Here, let's say I have my PAT, protocol with associated type, or with a Self-requirement, that's called Proto, and in this line here where I say [inaudible 00:43:06], the only place the protocol appears is right next to the T. So when you see class C, I'm defining a class, here come my type parameters. This class is generic over T, meaning every different T I could put in there would produce a different kind of C. But, here comes the constraint part, I can't just put any T I want in there. I can't just put in an integer or a string or a whatever. It has to be a T that adopts the Proto protocol.

So that's what it means to say that the PAT protocol can only be used as a generic constraint. I can't use it as the type of a variable or of a function argument or of a return value. I can only use it in this little bit of language where I'm saying, "Okay, here's the type parameter, but it's constrained, and the constraint is, whatever type I fill in here needs to be a type that adopts this protocol."
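A rough reconstruction of the shape being described, as a sketch only: Proto and C follow the names used in the answer, while the Value associated type, IntSource, and the method names are assumptions made so the example is complete. The variable declaration is left in a comment because it is the form the compiler in the talk rejects.

```swift
// A protocol with an associated type (a PAT).
protocol Proto {
    associatedtype Value
    func value() -> Value
}

struct IntSource: Proto {
    func value() -> Int { return 42 }
}

// Using the PAT as the type of a variable is what triggers the
// "can only be used as a generic constraint" error discussed in the talk:
// var protoVar: Proto

// Using it as a generic constraint: the only place Proto appears is next to T.
// Each different T produces a different kind of C.
final class C<T: Proto> {
    let source: T

    init(source: T) {
        self.source = source
    }

    func read() -> T.Value {
        return source.value()
    }
}

let holder = C(source: IntSource())   // a C<IntSource>
let answer = holder.read()            // T.Value is Int here
```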

So that's what it means. Does that answer the question?

Yes.

I feel like this is the essence of it, and it's very puzzling if you haven't done a lot of generic stuff before.

It's actually more of a follow-up comment, but when I first tried to understand it, it was very helpful for me to understand that, when you have the typealias, and you try to use the protocol, you're actually working with an unfinished type.

There's still holes in the type definition that cannot be filled by the class or struct that implements that protocol. By using that generic constraint, you basically define who's going to implement it and who's going to fill the holes and then fulfill the final type specification, essentially.

Yeah, that's a useful insight.

And when I first had that thought, that's what led me to thinking that everything could be understood by just regarding PATs as generic protocols. Because you can't have an array of different specializations of the same generic type. I couldn't have an array that had the first element being itself an Array<Int>, and the second element being an Array<String>. An Array<Int> and an Array<String> are different types, and you can't have an array that contains different types. And when I first started thinking about this, I thought, "Oh, maybe the problem, the reason I can't have an array of PATs, is because they could all be different under the hood."

But that's not actually the essence of it. Because if that were the only problem, if that were the only issue, that a PAT is a kind of a generic, and so they're actually diverse types, if that were the only issue, that would explain why you couldn't have an array of PATs that were differently specialized, but that wouldn't explain why you can't have a uniform array of PATs, and it wouldn't explain why you can't have a single variable that's a PAT. Because then there's no ambiguity. Then you have a specifically defined PAT that's being adopted with a specific knowable associated type.

So I think it's very useful to think about, in the sense that typealias defines a requirement that needs to be met, and it's an unfinished type until that's met, but that's not the fundamental reason why you can't use them as variables. The fundamental reason you can't use them as variables is that right now they've only built the machinery for static dispatch, and it seems like they want to build the machinery for dynamic dispatch as a thing on top of it, existentials, rather than the way people have done for... OOP programming.

I hope that's helpful.

Yeah. But that insight is what led me to think about, "Oh, maybe it's just because they're generic protocols." But I don't think that's all there is to it.

So I found myself in the same situation as you mentioned. I ended up with the solution of using enums, however at the end I wanted something to be a little bit easier to use, so I created a bind. And when I did the bind, because the bind is declared generically, I found myself back to square one, and I couldn't use it again without making binds specific again. How do you deal with that? Do you just have to do that switch statement again and again and again?

Yeah, I don't know. It's a problem.

Both of these workarounds — of pushing the dynamic dispatch-like behavior into enums, or pushing it into a type-erasing wrapper — involve a lot of boilerplate. Because let's say I have a plain old protocol, or even a superclass, that has 100 functions. And then I create different subclasses of them, and then you get dynamic dispatch on all of those functions. Whereas if you're using enums or if you're using type-erasing wrappers, I think you end up needing to create like 100 switch statements. I'm not sure about that, but that's been my experience so far. There might be some clever pattern in there, but I haven't found it. So it's a pain in the butt at the moment.

Thanks a lot for your talk, that was really great, definitely learned a lot of stuff about PATs.

A lot of the programmers that I admire and follow on Twitter reference academic papers like the one that you brought up during your talk. So my question is, where did you find that paper, or how did you discover it? And also is there a cool website or resource that I could use to find papers that touch on my interests? Sorry if that's a tangential question to the talk.

No, that's fine. I wrote a chapter of a book, I wrote the functional programming chapter and the generics chapter of a book on introductory programming in Swift. And I agreed to write that because I didn't really understand generics. I thought, "Well, if I write a chapter on it, that'll force me to understand it really well." So I just went googling a lot on generics, and I was trying to understand it in comparison to other languages, and I was also studying Haskell at the time, so I'd run into these issues.

And I think just googling on generics, I eventually stumbled into a reference to this paper. But then I found an old pre-print version of it. And one of the things I've... Just as a practical matter, when you find academic papers, they're usually locked up behind some paywall. But then if you look at the names of the authors and go to that author's website, then they usually distribute the PDF for free, or a PDF of an early version, and then if you decide it's worth it you can [inaudible 00:49:36] out the $50 to buy the official version.

So I just sort of googled around and then a bit of detective work to chase it down. But I thought this paper was really good. I mean, we have a community where we talk about knowledge issues, and there's blogs and talks, and the academic community is more separate from that than it should be, but this paper is fantastic. This was like four or five people working on this over years, with NSF funding, systematically developing different graph libraries. It's like a lot more work and thought went into this than putting together a blog post. And really I've just tried to communicate a little fraction of it. So I think it's really worth digging into these papers, whenever you bump into them.

Thanks a lot.

[crosstalk 00:50:25] Oh, yeah. That's a good one.

Lambda the Ultimate. Like lambda, as in lambda function. There's a website for programming language stuff.

This is just a quick comment. Six days ago there was a proposal on Swift Evolution that was merged into Swift 3 that will hopefully alleviate some of the ambiguity around the typealias keyword. It's going to now use associatedtype inside protocols, and in Swift 2.2 they'll deprecate use of the typealias keyword inside protocol definitions. So...

[crosstalk 00:50:52] That's good. I like that. I'd heard about that, but... [inaudible 00:50:53]

So the reason I'm bringing it up is, part of the excitement around Swift being open source is that we can now witness and participate in precisely that evolution. But thank you for your... [inaudible 00:51:03]

Thanks. Yeah, I think that's a good development.

[inaudible 00:51:06] ... little bit more time for question... [inaudible 00:51:09]

Yeah, sure.

There's one at the back. Getting my exercise points.

Awesome talk, I learned a lot. Could you go into the definition of type erasure? I'm not really familiar with it. And maybe go into the AnyGenerator or AnySequence example? Like how that's an example of type erasure?

I think I'd do a better job showing the example I worked through than trying to provide a definition in the abstract. Let me go through the example. Maybe not right now, because there's other questions, but later, and that can show it. I think I'd have a hard time putting it in language well. Basically, you have a type that is concealing the fact that there were parameterized types underneath internally. So on the outside... So for instance, let's say I have AnySequence. So that's an example of a type-erasing wrapper that's in the standard library. If I had some type which was a sequence type, and it would be a sequence type of some particular element type, so let's say I have a type which is a sequence type of integers. Now, that type has two types associated with it. Well, integers, obviously, we know it's a sequence type of integers. But we don't just know that it's a sequence type of integers, it's a particular type. It could be an array of integers, or it could be some kind of list of integers.

So there's two types that you're managing in that situation. You're managing the element type, what it's a sequence of, and then you're actually managing the type of sequence type it is, [inaudible 00:52:50] an array, or is it a linked list? You might have situations where what you wanted was just to have a type, any type at all, that represents a sequence of integers, and you don't actually care if it's an array or if it's a linked list or whatever. So you can create a type, and that's the type-erasing wrapper type, which kind of disguises if it's an array underneath or if it's a linked list underneath, and it only exposes that it's giving you a sequence-like interface to integers. So that's an example of a type-erasing wrapper that's in the standard library. So I'm not sure how to define that abstractly, but that's what it is. And if you wanted to get something like dynamic dispatch, you'd need that, because you want dynamic dispatch [inaudible 00:53:40] maybe you don't care about some type fact underneath, you just want to be able to know that you can call a method. And so if you get a type-erasing wrapper, then you don't need to use the type as a generic constraint, because you know that you have an AnySequence.

Is that a bit helpful? I can walk through an example later, but that's the gist of it.
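The array-versus-linked-list case from this answer can be sketched as follows. IntList is a hypothetical linked list written only for this example; AnySequence and AnyIterator are the standard library wrappers. The total function is deliberately not generic: it sees only "a sequence of Int".

```swift
// A minimal linked list of Int that conforms to Sequence.
indirect enum IntList: Sequence {
    case empty
    case node(Int, IntList)

    // Walks the list one node at a time.
    func makeIterator() -> AnyIterator<Int> {
        var current = self
        return AnyIterator {
            switch current {
            case .empty:
                return nil
            case .node(let value, let rest):
                current = rest
                return value
            }
        }
    }
}

// A non-generic function: the parameter type says nothing about whether
// an array or a linked list sits underneath.
func total(_ numbers: AnySequence<Int>) -> Int {
    return numbers.reduce(0, +)
}

let wrappedArray: AnySequence<Int> = AnySequence([1, 2, 3])
let wrappedList: AnySequence<Int> = AnySequence(IntList.node(4, .node(5, .empty)))

let arrayTotal = total(wrappedArray)
let listTotal = total(wrappedList)
```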

And so final question, and then lunch and... [inaudible 00:54:02]

You showed the slide from Dave Abrahams' talk on protocol oriented programming, and I feel like that's a very helpful distinction between the two types. And I guess it's more a comment on the motivation. It seems like in this case... And if you look at what Swift is, it's very much like a bridge between two ecosystems, one of which we don't really know what the other end is, whatever Cocoa in Swift looks like. AppKit and UIKit are very much designed around dynamic dispatch and Objective-C, so do you think this is more of a scaffolding on the left side, the ones without the Self-requirement, that's more of a scaffolding, that's not for the sake of static type information, but really just until they have a nicer method to do dynamic dispatch?

I don't know. I think one question is, let's say you've got existentials. Then you wouldn't have this static dispatch on the right-hand side as the only way to do it. Would that give you functionally all the same things that you get, left-hand side? I don't know.

I don't think it would give you everything, but I think...

[crosstalk 00:55:25] ... it would give you a big piece of what's missing. So yeah, I don't know. That's the interesting question. When I first started looking through this, and especially looking at the thinking that seemed to inform this, I would have thought that this would be true a year and a half from now. This step [inaudible 00:55:44] existentials being marked as a... [inaudible 00:55:46]

Well, let's thank Alexis again. So there will be lunch, maybe it's not there yet, it will be there within minutes. A conference is not only about listening...